Introduction

Hybrid cooling models can help tackle the rising demands of AI compute. Artificial Intelligence is growing at an unprecedented pace, and by some estimates the compute used to train leading models has been doubling every few months. This exponential increase presents significant challenges: “good enough” is no longer sufficient for the infrastructure, hardware, and software behind AI workloads. Traditional approaches are quickly becoming inadequate, leading to escalating costs, infrastructure bottlenecks, and performance limitations that hinder innovation and slow progress.

Meeting these demands requires a new way of thinking. Optimizing AI workloads involves a strategic combination of diverse hardware and software, each playing a specific role in the overall ecosystem. This holistic view covers the entire AI lifecycle, from data ingestion and model training to inference and deployment, to ensure optimal performance and resource utilization. It moves beyond traditional, one-size-fits-all solutions toward a more nuanced and flexible strategy.

This article argues that engineering a “best of both worlds” strategy is not merely beneficial but absolutely crucial for maximizing AI performance, reducing operational costs, and achieving sustainable growth. By strategically leveraging the strengths of different hardware architectures, software frameworks, and infrastructure models, organizations can unlock new levels of efficiency and scalability in their AI initiatives. This approach is essential for staying competitive in the rapidly evolving landscape of AI and realizing the full potential of this transformative technology.

Understanding the Landscape

The AI lifecycle comprises distinct phases, each with its own computational demands. Addressing these varying needs effectively is critical for optimizing resource allocation and overall performance. Data ingestion, for instance, often relies on robust CPUs for efficient preprocessing and data manipulation. These tasks, while not as computationally intensive as deep learning, are crucial for preparing the data for subsequent stages. A balanced approach that recognizes the importance of CPU capabilities during this initial phase is therefore essential.

However, when it comes to model training, the paradigm shifts dramatically. This phase demands massive parallel processing capabilities, making GPUs the undisputed champions. Their architecture, designed for handling thousands of concurrent operations, excels at the matrix multiplications and other linear algebra operations that underpin deep learning algorithms.

Furthermore, specialized hardware accelerators such as TPUs and FPGAs offer even greater potential for certain AI workloads. TPUs, custom-designed by Google, were originally built around TensorFlow (and are now also programmable from JAX and PyTorch through the XLA compiler); they perform exceptionally well on the dense matrix operations at the heart of many neural network architectures. FPGAs, meanwhile, provide a flexible, reconfigurable platform that can be tailored to accelerate a wide range of AI tasks.

Ultimately, the selection of the right hardware hinges on the specific requirements of each stage in the AI pipeline. This necessitates a thorough understanding of the computational characteristics of each task and a strategic allocation of resources to maximize efficiency and minimize costs. Because these diverse components also generate very different amounts of heat, organizations are increasingly adopting *hybrid cooling models* to manage their thermal load efficiently.

Software Optimization Is Key


The pursuit of optimal AI workload performance extends far beyond the selection of cutting-edge hardware. Software optimization is a critical component that can unlock the full potential of your infrastructure and significantly impact overall efficiency. Think of it as fine-tuning an engine: even the most powerful engine will underperform without precise calibration and efficient fuel delivery. In the context of AI, this calibration involves selecting the right frameworks and libraries, optimizing algorithms, and ensuring efficient resource utilization.

Leveraging Software Frameworks and Libraries

The AI landscape is rich with powerful software frameworks and libraries, each offering unique strengths and capabilities. TensorFlow and PyTorch, for example, are dominant forces in deep learning, providing comprehensive tools for building and training neural networks. Scikit-learn offers a wealth of algorithms for classical machine learning tasks such as classification, regression, and clustering. Choosing the right framework for your specific needs is the first step. However, simply selecting a framework isn’t enough.

You must leverage its features effectively. This includes using optimized routines, taking advantage of hardware acceleration capabilities (e.g. GPU support), and understanding the framework’s memory management strategies. Furthermore, staying up-to-date with the latest versions and patches is crucial, as these often include performance improvements and bug fixes.

Algorithm Optimization Techniques

Beyond frameworks and libraries, algorithmic efficiency plays a pivotal role. Seemingly small improvements in algorithm design can lead to substantial gains in performance, especially when dealing with large datasets or complex models.

Techniques like pruning (removing unnecessary connections in a neural network), quantization (reducing the precision of numerical representations), and knowledge distillation (transferring knowledge from a larger model to a smaller one) can significantly reduce computational requirements, often with little or no loss in accuracy. Moreover, consider using optimized libraries that implement common algorithms more efficiently.

For instance, libraries like cuBLAS and cuDNN provide highly optimized implementations of linear algebra and deep learning primitives for NVIDIA GPUs, unlocking significant performance gains. Ultimately, the choice of algorithm and its implementation should be carefully considered in light of the available hardware resources and the specific requirements of the AI workload.

This may involve experimenting with different algorithms, profiling their performance, and iteratively refining the implementation to achieve optimal results. Temperature control matters here too: hardware that overheats throttles its clock speeds, eroding the gains from algorithmic tuning, and *hybrid cooling models* offer a range of ways to keep it within safe operating limits.
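The compression techniques mentioned above (pruning, quantization, and knowledge distillation) can be illustrated on a plain list of weights. The sketch below is pure Python for readability and assumes a single flat weight tensor; it shows unstructured magnitude pruning, symmetric per-tensor int8 quantization, and a temperature-softened distillation loss. Deep learning frameworks ship their own tooling for all three, and the function names here are illustrative only.

```python
import math

def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # how many weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each weight is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to floats; the rounding error per weight is at most scale / 2."""
    return [scale * v for v in q]

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.2]
print(magnitude_prune(weights, 0.5))  # [0.8, 0.0, 0.3, -0.9, 0.0, 0.0]
q, scale = quantize_int8(weights)
print(q, scale)
print(distillation_loss([2.0, 0.5, -1.0], [2.5, 0.2, -1.5]))
```

In a full distillation setup this soft-target term is usually combined with an ordinary cross-entropy loss on the true labels; the sketch shows only the soft-target part.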

The Power of Parallelism

Distributed training is essential for scaling AI models to handle massive datasets and complex architectures. There are several strategies to achieve this, each with its trade-offs. Data parallelism involves distributing the training data across multiple nodes, with each node holding a complete copy of the model.

Model parallelism, on the other hand, divides the model itself across different nodes, which is beneficial for models that are too large to fit on a single device. Pipeline parallelism refines this further by partitioning the model into sequential stages and assigning each stage to a different node, so that different micro-batches of data can be processed concurrently. Which type of parallelism to use depends on the workload, the model size, and the dataset.
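Data parallelism rests on a simple identity: if every worker holds a full copy of the model and an equal-sized shard of the batch, averaging the workers' gradients gives the same update as computing the gradient on the whole batch. The pure-Python sketch below checks this for a hypothetical one-parameter linear model with a mean-squared-error loss. The worker loop runs sequentially here, standing in for separate devices, and the averaging line stands in for the all-reduce that frameworks such as PyTorch DDP or Horovod perform.

```python
def local_gradient(w, shard):
    """Gradient of mean((w*x - y)^2) with respect to w over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def shard_data(data, num_workers):
    """Split data into equal contiguous shards (assumes len(data) % num_workers == 0)."""
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]

def data_parallel_step(w, data, num_workers, lr):
    shards = shard_data(data, num_workers)
    # Each worker computes a gradient on its own shard (in parallel on real hardware).
    grads = [local_gradient(w, shard) for shard in shards]
    # All-reduce: average the gradients so every replica applies the same update.
    avg_grad = sum(grads) / num_workers
    return w - lr * avg_grad

# Toy dataset drawn from y = 3x
data = [(x / 10, 3 * x / 10) for x in range(1, 9)]
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, data, num_workers=4, lr=0.5)
print(round(w, 4))  # 3.0
```

The identity only holds exactly when shards are the same size; with uneven shards, the average would need to be weighted by shard size.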

Efficient communication between nodes is paramount in distributed training. The overhead of transferring gradients and parameters can quickly become a bottleneck if not managed correctly. Technologies like Remote Direct Memory Access (RDMA) and optimized communication libraries play a crucial role in minimizing latency and maximizing bandwidth. Careful consideration must be given to the network topology and the communication patterns of the distributed training framework to ensure optimal performance.
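Communication libraries such as NVIDIA's NCCL can average gradients with a ring all-reduce, which keeps the traffic each node sends nearly constant as the cluster grows. The sketch below simulates its two phases (reduce-scatter, then all-gather) in plain Python and counts the elements each node sends, which works out to 2(N-1)/N times the vector length for N nodes. It passes messages through lists rather than a real network and assumes the vector length divides evenly by the number of nodes.

```python
def ring_allreduce(vectors):
    """Simulate ring all-reduce; returns (per-node results, elements sent per node)."""
    n = len(vectors)
    length = len(vectors[0])
    if length % n:
        raise ValueError("vector length must be divisible by the number of nodes")
    c = length // n
    # bufs[i][k] is node i's copy of chunk k
    bufs = [[list(v[k * c:(k + 1) * c]) for k in range(n)] for v in vectors]
    sent = 0

    # Phase 1, reduce-scatter: after n-1 steps node i holds the full sum of chunk (i+1) % n.
    for step in range(n - 1):
        msgs = [(i, (i - step) % n, list(bufs[i][(i - step) % n])) for i in range(n)]
        for i, k, data in msgs:
            dst = (i + 1) % n
            bufs[dst][k] = [a + b for a, b in zip(bufs[dst][k], data)]
        sent += c

    # Phase 2, all-gather: each fully reduced chunk travels once around the ring.
    for step in range(n - 1):
        msgs = [(i, (i + 1 - step) % n, list(bufs[i][(i + 1 - step) % n])) for i in range(n)]
        for i, k, data in msgs:
            bufs[(i + 1) % n][k] = data
        sent += c

    results = [[x for chunk in b for x in chunk] for b in bufs]
    return results, sent

nodes = [[1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60], [100, 200, 300, 400, 500, 600]]
results, sent = ring_allreduce(nodes)
print(results[0])  # [111, 222, 333, 444, 555, 666]
print(sent)        # 8, i.e. 2 * (3 - 1) / 3 * 6
```

Because each node only ever talks to its neighbour, the bandwidth term does not grow with the number of nodes, although the number of sequential steps (and thus the latency term) does.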

Several frameworks facilitate distributed training, including Horovod, Ray, and PyTorch’s DistributedDataParallel (DDP). Horovod, for example, is known for its simplicity and compatibility with various deep learning frameworks. Ray is a more general-purpose distributed computing framework that can be used for a wide range of AI workloads, including reinforcement learning and hyperparameter tuning.

DDP, integrated directly into PyTorch, provides a convenient way to parallelize training across multiple GPUs or nodes. Cloud platforms offer managed services that further simplify distributed training by providing pre-configured environments and optimized infrastructure. Because the provider also operates the underlying data center and its cooling, including any hybrid cooling models deployed there, data scientists can use dense GPU capacity without managing thermal infrastructure themselves.

| Parallelism Type | Description | Suitable For |
|---|---|---|
| Data Parallelism | Distribute data across nodes | Large datasets, smaller models |
| Model Parallelism | Distribute model across nodes | Large models, limited GPU memory |
| Pipeline Parallelism | Divide model into stages | Complex models with sequential operations |
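The idle time ("bubble") that pipeline parallelism introduces can be quantified. Assuming a GPipe-style schedule in which every stage takes the same time per micro-batch, and showing only the forward pass, a pipeline of p stages processing m micro-batches needs m + p - 1 time steps, and a fraction (p - 1)/(m + p - 1) of stage-steps sits idle. The sketch below builds that schedule and checks the formula; the equal-stage-time assumption is a simplification.

```python
def pipeline_schedule(num_stages, num_microbatches):
    """grid[t][s] is the micro-batch that stage s processes at step t, or None if idle."""
    steps = num_microbatches + num_stages - 1
    grid = [[None] * num_stages for _ in range(steps)]
    for mb in range(num_microbatches):
        for stage in range(num_stages):
            # Stage s can start micro-batch mb only after stage s-1 has finished it.
            grid[mb + stage][stage] = mb
    return grid

def bubble_fraction(num_stages, num_microbatches):
    return (num_stages - 1) / (num_microbatches + num_stages - 1)

def idle_fraction(grid):
    slots = [cell for row in grid for cell in row]
    return slots.count(None) / len(slots)

grid = pipeline_schedule(num_stages=3, num_microbatches=4)
for t, row in enumerate(grid):
    print(f"t={t}: " + " ".join("." if mb is None else str(mb) for mb in row))
print(idle_fraction(grid), bubble_fraction(3, 4))
```

Splitting each batch into more micro-batches shrinks the bubble, which is why pipeline-parallel training typically uses many small micro-batches.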

Orchestration and Automation

Containerization, particularly with Docker, has become a cornerstone of modern AI application deployment. Docker allows data scientists and engineers to package their AI models, dependencies, and configurations into lightweight, portable containers. This ensures consistency across different environments, from development to testing to production, eliminating the “it works on my machine” problem that plagues many software projects.

Furthermore, containerization simplifies deployment, enabling faster iteration cycles and more reliable releases of AI-powered applications. This approach streamlines the software development lifecycle and allows teams to focus more on model development and less on environment-specific configurations.

Kubernetes then takes these containerized AI workloads and orchestrates them at scale. It acts as a central management system, automating tasks such as deployment, scaling, and monitoring. Kubernetes provides features like resource management, scheduling, and fault tolerance.

This is particularly critical for AI applications that often require significant computational resources and high availability. For example, Kubernetes can automatically scale the number of GPU-backed containers based on the incoming request volume for an image recognition service, ensuring optimal performance even during peak periods. Popular platforms like Kubeflow, built on top of Kubernetes, provide specialized tools and workflows for managing machine learning pipelines, further streamlining the development and deployment process.
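The scaling decision behind this kind of autoscaling is simple arithmetic. Kubernetes' Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), leaving the replica count unchanged when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces only that rule plus min/max clamping; the real controller also applies stabilization windows and scaling policies, and scaling on request volume assumes a custom or external metrics pipeline is in place.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Core HPA scaling rule with min/max clamping (no stabilization window)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replica count alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

# 4 GPU-backed pods each seeing 150 requests/s against a target of 100 requests/s
print(desired_replicas(4, 150, 100))  # 6
```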

The marriage of containerization and orchestration offers significant advantages in the AI landscape. Containerization ensures consistent deployment across different environments, while Kubernetes automates the management and scaling of containerized AI workloads. This combination allows data scientists and engineers to focus on building and improving AI models, rather than worrying about the complexities of infrastructure management.

This optimized and automated environment improves resource utilization, reduces operational overhead, and ultimately accelerates the delivery of AI-powered solutions. Utilizing hybrid cooling models for the underlying infrastructure can further enhance the performance and reliability of these containerized and orchestrated AI workloads.

| Technology | Benefit |
|---|---|
| Docker | Consistent environment, simplified deployment |
| Kubernetes | Automated scaling, resource management, fault tolerance |
| Kubeflow | Specialized tools for ML pipelines |

Cloud vs. On-Premise vs. Hybrid

The rise of AI and machine learning has presented organizations with a pivotal decision: where to host their increasingly demanding workloads. The traditional on-premise infrastructure, with its dedicated hardware and controlled environment, offers benefits like data security and compliance adherence. However, it often struggles to scale quickly and efficiently to meet the dynamic needs of AI development and deployment.

Conversely, the cloud offers seemingly limitless scalability and a pay-as-you-go model, making it attractive for rapidly evolving AI projects. But concerns around data sovereignty, vendor lock-in, and unpredictable costs can be significant drawbacks. This leaves many organizations seeking a middle ground.

A hybrid approach, strategically blending on-premise and cloud resources, allows organizations to leverage the strengths of both. For instance, sensitive data requiring strict compliance can remain securely within the on-premise environment, while computationally intensive model training can be offloaded to the cloud’s vast GPU resources. This careful orchestration of resources demands robust management tools and a deep understanding of workload characteristics.

The key to success lies in identifying which components of the AI pipeline are best suited for each environment. Data ingestion and preprocessing might occur on-premise, while model training and inference are executed in the cloud.

Choosing the optimal infrastructure model is not a one-size-fits-all solution. It requires a thorough evaluation of factors such as workload requirements, security constraints, budget limitations, and long-term strategic goals. Organizations must carefully assess their existing infrastructure, data governance policies, and internal expertise to determine the most appropriate deployment strategy.

Furthermore, the optimal model may evolve over time as AI projects mature and business needs change. Therefore, a flexible and adaptable approach is essential for navigating the complex landscape of AI infrastructure.
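Budget is one of the factors listed above, and a first-pass way to weigh it is a break-even estimate: how many hours of use it takes before buying hardware costs less than renting equivalent cloud capacity. The sketch below uses deliberately simple, hypothetical figures (a one-off purchase cost, a flat hourly on-premise running cost covering power, cooling, and operations, and a flat cloud hourly rate) and ignores discounting, hardware refresh cycles, and data-transfer fees. Treat it as a template for plugging in real quotes, not as a cost model.

```python
import math

HOURS_PER_YEAR = 24 * 365

def breakeven_hours(onprem_capex, onprem_cost_per_hour, cloud_cost_per_hour):
    """Hours of use after which owning the hardware is cheaper than renting it."""
    saving_per_hour = cloud_cost_per_hour - onprem_cost_per_hour
    if saving_per_hour <= 0:
        return math.inf  # renting is never more expensive per hour
    return onprem_capex / saving_per_hour

def breakeven_years(hours, utilization):
    """Calendar years to accumulate `hours` of use at an average utilization in (0, 1]."""
    return hours / (HOURS_PER_YEAR * utilization)

# Hypothetical figures for a single GPU server
hours = breakeven_hours(onprem_capex=250_000, onprem_cost_per_hour=8.0, cloud_cost_per_hour=30.0)
for utilization in (1.0, 0.7, 0.4):
    print(f"{utilization:.0%} utilization: {breakeven_years(hours, utilization):.1f} years")
```

With these made-up numbers the server pays for itself in about 1.3 years at full utilization but takes over three years at 40% utilization, which is why utilization forecasts matter as much as list prices.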

Cooling Considerations for Peak Performance

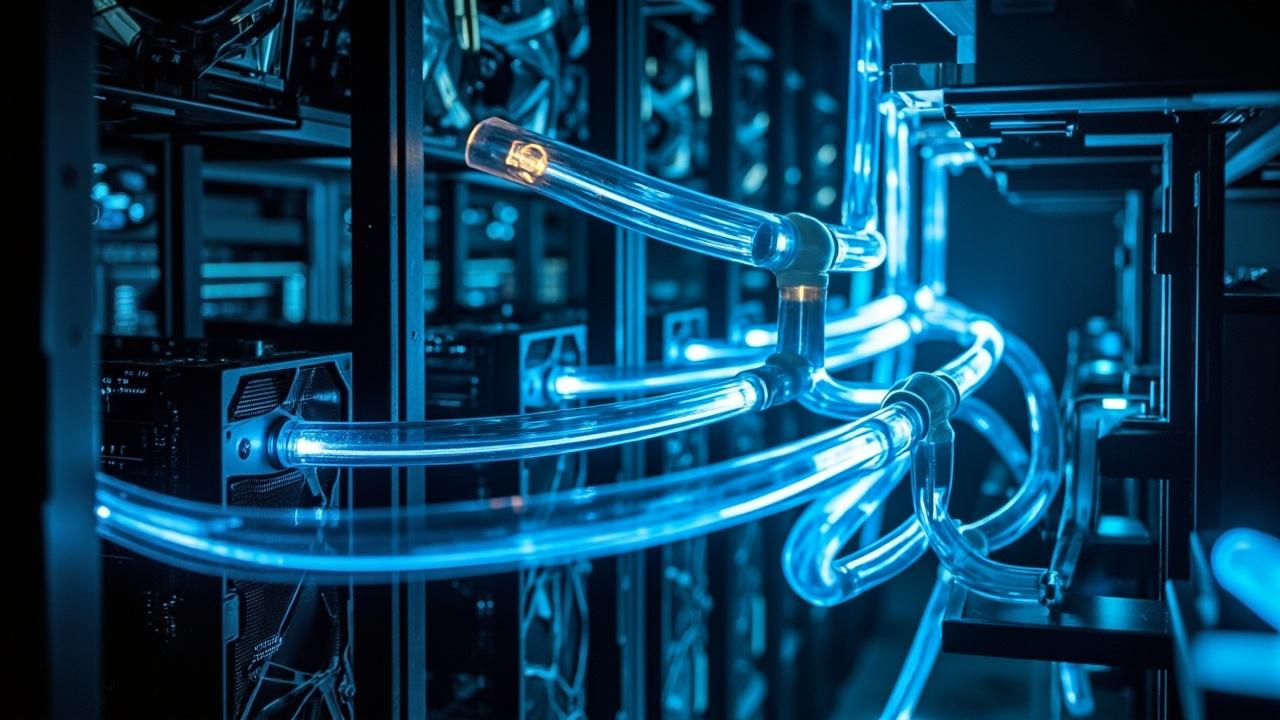

The relentless demands of AI workloads place immense strain on hardware, leading to significant heat generation. Without effective cooling, components can overheat, triggering performance throttling and ultimately reducing efficiency. Maintaining optimal operating temperatures is therefore paramount to unlocking the full potential of AI infrastructure. Neglecting this critical aspect can result in wasted resources, prolonged training times, and even hardware failure. This makes the selection and implementation of appropriate cooling solutions an essential consideration when designing and deploying AI systems.

Different cooling technologies offer varying levels of effectiveness and cost. Air cooling, the most traditional method, utilizes fans and heatsinks to dissipate heat from components. While relatively inexpensive and easy to implement, air cooling’s effectiveness diminishes as power densities increase.

Liquid cooling, on the other hand, uses a circulating liquid (typically water or a specialized coolant) to absorb and transport heat away from components. Liquid cooling offers superior heat dissipation capabilities compared to air cooling but comes with higher costs and increased complexity. Immersion cooling, an even more advanced technique, involves submerging hardware directly in a dielectric fluid, providing exceptional cooling performance but requiring specialized infrastructure.
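The gap between air and liquid cooling follows from the sensible-heat relation P = ρ · V̇ · c_p · ΔT: the volumetric flow V̇ needed to carry away P watts with a coolant temperature rise ΔT is P / (ρ · c_p · ΔT). The sketch below compares the flow each coolant needs for a hypothetical 10 kW rack with a 10 K rise, using approximate room-temperature properties as assumed inputs (ρ ≈ 1.2 kg/m³ and c_p ≈ 1005 J/(kg·K) for air; ρ ≈ 997 kg/m³ and c_p ≈ 4186 J/(kg·K) for water).

```python
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K), approx. at ~20 C
WATER = {"density": 997.0, "cp": 4186.0}  # kg/m^3, J/(kg*K), approx. at ~25 C

M3S_TO_CFM = 2118.88   # cubic feet per minute in one m^3/s
M3S_TO_LPM = 60_000.0  # litres per minute in one m^3/s

def required_flow_m3s(heat_watts, delta_t_k, fluid):
    """Volumetric flow that removes heat_watts with a coolant temperature rise of delta_t_k."""
    return heat_watts / (fluid["density"] * fluid["cp"] * delta_t_k)

rack_watts, delta_t = 10_000, 10
air = required_flow_m3s(rack_watts, delta_t, AIR)
water = required_flow_m3s(rack_watts, delta_t, WATER)
print(f"air:   {air:.3f} m^3/s (~{air * M3S_TO_CFM:.0f} CFM)")
print(f"water: {water * M3S_TO_LPM:.1f} L/min")
print(f"air needs ~{air / water:.0f}x the volume flow of water")
```

The result, roughly 0.83 m³/s of air versus about 14 L/min of water, shows why air cooling struggles as rack power densities climb: per unit volume, water carries on the order of 3,500 times more heat for the same temperature rise.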

For many organizations, the optimal solution lies in the strategic application of *hybrid cooling models*. This involves combining different cooling technologies to address the specific thermal requirements of various components. For example, CPUs, which typically generate less heat than GPUs, might be adequately cooled with air cooling, while power-hungry GPUs could benefit from liquid cooling.

Such a hybrid approach allows for a more targeted and cost-effective solution than a uniform cooling strategy across the entire system. A typical configuration might include:

- Utilizing direct-to-chip liquid cooling for high-powered GPUs.

- Employing rear-door heat exchangers to further cool exhaust air.

- Using traditional air cooling methods for lower-powered components like RAM and storage drives.
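A hybrid plan like the one above can be drafted by assigning each component class a cooling path based on its power draw, then checking how much of the heat the liquid loop captures, since the remainder must be handled on the air side (for example by rear-door heat exchangers). The wattages and the 500 W cut-off below are hypothetical placeholders, not vendor figures; real decisions depend on chassis design, inlet temperatures, and manufacturer guidance.

```python
# (component, watts per unit, units per server); illustrative numbers only
SERVER = [
    ("GPU", 700, 8),
    ("CPU", 350, 2),
    ("DIMM", 5, 16),
    ("SSD", 10, 8),
]

def plan_cooling(components, liquid_threshold_w=500):
    """Send components at or above the threshold to direct-to-chip liquid, the rest to air."""
    return {name: ("liquid" if watts >= liquid_threshold_w else "air")
            for name, watts, _ in components}

def heat_split(components, plan):
    """Total watts handled by each cooling path."""
    totals = {"liquid": 0, "air": 0}
    for name, watts, count in components:
        totals[plan[name]] += watts * count
    return totals

plan = plan_cooling(SERVER)
split = heat_split(SERVER, plan)
liquid_share = split["liquid"] / (split["liquid"] + split["air"])
print(plan)
print(split, f"liquid captures {liquid_share:.0%}")
```

In this made-up server the liquid loop captures most of the heat, leaving a few hundred watts per server for the air side; that residual is what sizing rear-door heat exchangers or room air handling has to cover.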

Furthermore, monitoring temperature and power consumption in real-time is crucial for optimizing cooling strategies. Analyzing this data allows administrators to identify hotspots, adjust fan speeds, and proactively address potential thermal issues before they impact performance.
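On NVIDIA hardware, per-GPU temperature and power can be read with the query mode of `nvidia-smi` (`--query-gpu=...` together with `--format=csv,noheader,nounits`). The sketch below wraps that call and flags GPUs above a temperature limit. The 80 °C limit is a placeholder to be replaced with the thresholds from your hardware documentation, and the parser is kept separate from the subprocess call so it can be exercised without a GPU.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,temperature.gpu,power.draw",
    "--format=csv,noheader,nounits",
]

def _to_float(field):
    try:
        return float(field)
    except ValueError:
        return None  # fields such as "[N/A]" when a sensor is unavailable

def parse_gpu_readings(csv_text):
    """Turn lines like '0, 65, 250.12' into dicts of index, temperature, and power."""
    readings = []
    for line in csv_text.strip().splitlines():
        index, temp, power = (field.strip() for field in line.split(","))
        readings.append({"gpu": int(index), "temp_c": _to_float(temp),
                         "power_w": _to_float(power)})
    return readings

def find_hotspots(readings, temp_limit_c=80.0):
    """GPU indices at or above the (placeholder) temperature limit."""
    return [r["gpu"] for r in readings
            if r["temp_c"] is not None and r["temp_c"] >= temp_limit_c]

def poll_once():
    """Query the local GPUs once (requires nvidia-smi on PATH)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_readings(result.stdout)

# Offline example using sample output instead of a live query
sample = "0, 65, 250.12\n1, 84, 310.50\n2, 71, [N/A]"
print(find_hotspots(parse_gpu_readings(sample)))  # [1]
```

In a deployment, `poll_once()` would run on a timer and feed a metrics or alerting system rather than printing.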

Case Studies

The following case studies show how organizations have optimized their AI workloads by combining hardware, software, and infrastructure strategies. Each illustrates a practical application of the ‘best of both worlds’ approach to AI workload optimization and the tangible benefits that strategic hardware, software, and infrastructure choices can deliver.

Financial Services: Fraud Detection Acceleration

A leading financial institution struggled with the computational demands of its fraud detection models. Initially, their reliance on CPU-based infrastructure resulted in long processing times, hindering their ability to identify and prevent fraudulent transactions in real-time. By strategically incorporating GPUs for the computationally intensive model training and inference phases, they significantly accelerated the entire process.

Further optimizations were achieved by leveraging optimized deep learning frameworks and libraries, reducing training times by a factor of 10. The heavier GPU usage initially drove up operating temperatures, a problem they solved by implementing hybrid cooling models, improving performance as a result. The outcome was a substantial reduction in fraudulent activities and improved customer satisfaction.

Healthcare: Drug Discovery and Development

A pharmaceutical company faced challenges in accelerating its drug discovery pipeline. The simulation of molecular interactions and the analysis of large genomic datasets proved computationally prohibitive with their existing infrastructure. To address this, they adopted a hybrid cloud approach, leveraging cloud-based GPUs for model training and on-premise infrastructure for sensitive data storage and processing.

Furthermore, they embraced containerization and Kubernetes to streamline deployment and scaling of their AI applications. This allowed them to reduce the time required to identify potential drug candidates and accelerate the overall drug development process, resulting in significant cost savings and faster time-to-market.

E-Commerce: Personalized Recommendation Engines

A large e-commerce retailer aimed to enhance its personalized recommendation engine to improve customer engagement and drive sales. They faced the challenge of processing vast amounts of user data and generating real-time recommendations with low latency. To overcome this, they implemented a distributed training strategy using data parallelism, enabling them to train their models on a cluster of GPUs.

They also optimized their model architectures using techniques such as pruning and quantization, which reduced the model size and improved inference speed. This resulted in a significant increase in click-through rates, higher conversion rates, and improved customer lifetime value.

Future Trends

The rapid evolution of AI is not only driving advancements in algorithms and models but also pushing the boundaries of computing infrastructure. As we look to the future, several exciting trends promise to revolutionize how we approach AI workload optimization. Quantum computing, while still in its early stages, holds the potential to solve currently intractable problems and unlock new possibilities for AI.

Similarly, neuromorphic computing, which mimics the structure and function of the human brain, offers the promise of energy-efficient and highly parallel processing for certain AI tasks. Furthermore, the rise of edge AI is bringing computation closer to the data source, enabling real-time decision-making and reducing latency in applications such as autonomous vehicles and IoT devices.

AI-powered optimization tools are also poised to play a significant role in automating workload management. These tools can analyze performance data, identify bottlenecks, and dynamically adjust resource allocation to optimize efficiency. For instance, they can automatically select the most appropriate hardware (CPU, GPU, or specialized accelerator) for a given task, adjust batch sizes during training, or optimize data placement for faster access.

Such automation can significantly reduce the burden on IT teams and enable them to focus on higher-level strategic initiatives. Advancements in monitoring technologies will also enable a better understanding of the performance and utilization of AI infrastructure.
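As an example of the batch-size adjustment mentioned above, a tool can search for the largest batch that fits in accelerator memory. The sketch below binary-searches over a `fits(batch_size)` predicate and assumes the predicate is monotonic (if a batch fits, every smaller one does too). The predicate shown is a hypothetical linear memory model; in practice it would attempt a training step and catch the framework's out-of-memory error.

```python
def largest_fitting_batch(fits, low=1, high=4096):
    """Largest batch size in [low, high] for which fits(batch_size) is True."""
    if not fits(low):
        raise ValueError("even the smallest batch does not fit")
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if fits(mid):
            low = mid        # mid fits: the answer is mid or larger
        else:
            high = mid - 1   # mid does not fit: the answer is smaller
    return low

# Hypothetical memory model, in MB: 2048 MB for weights and optimizer state
# plus 50 MB of activations per sample, on a 24576 MB (24 GB) device.
def fits_in_memory(batch_size):
    return 2048 + 50 * batch_size <= 24576

print(largest_fitting_batch(fits_in_memory))  # 450
```

Binary search needs only about a dozen trial steps over this range, which matters when each probe is a real forward and backward pass.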

The key to success will lie in continuous monitoring and optimization. The AI landscape is constantly changing, with new hardware, software, and algorithms emerging regularly. Organizations need to establish robust monitoring systems to track the performance of their AI workloads and identify areas for improvement.

They also need to be agile and willing to experiment with new technologies and techniques to stay ahead of the curve. This proactive approach will enable them to adapt to the evolving demands of AI and maximize the return on their investments, including investments in hybrid cooling models for increasingly powerful hardware.

Conclusion

The journey to AI success hinges on recognizing that no single solution reigns supreme. The optimal path involves a carefully orchestrated blend of cutting-edge hardware, intelligently designed software, scalable infrastructure, and efficient cooling strategies. Embracing this multifaceted approach allows organizations to unlock the full potential of their AI initiatives, driving innovation and achieving tangible business outcomes.

Throughout this exploration, we’ve uncovered the nuances of hardware selection, the power of software optimization, the scalability offered by distributed training, and the streamlined management enabled by containerization and orchestration. We’ve also considered the strategic choices surrounding cloud, on-premise, and hybrid infrastructure models, not forgetting the critical role of cooling technologies, including hybrid cooling models, in maintaining peak performance. Each element plays a vital role in the overall efficiency and effectiveness of AI workloads.

As you embark on your own AI optimization journey, remember that experimentation and adaptation are key. The landscape is constantly evolving, with new technologies and techniques emerging regularly.

By embracing a holistic approach, continuously monitoring performance, and remaining open to new possibilities, you can unlock unprecedented levels of performance, efficiency, and scalability, paving the way for lasting success in the age of AI. Don’t hesitate to explore the resources available and seek expert guidance to tailor your approach to your specific needs and goals.

Frequently Asked Questions

What are hybrid cooling models and how do they differ from traditional cooling methods?

Hybrid cooling models represent a synergy of multiple cooling technologies, often combining the strengths of different methods to achieve optimal efficiency. Unlike traditional cooling, which might rely solely on air conditioning or chilled water, hybrid approaches strategically integrate techniques like direct liquid cooling with conventional air cooling.

This allows for targeted cooling where heat density is highest while maintaining cost-effectiveness in less demanding areas, resulting in a more balanced thermal management solution.

What are the primary benefits of using hybrid cooling models in data centers or other applications?

The adoption of hybrid cooling models offers several key advantages, especially in demanding environments like data centers. Improved energy efficiency stands out as a major benefit, translating to reduced operational expenses and a smaller environmental footprint.

Enhanced cooling capacity allows for support of higher-density computing environments, and the targeted nature of these solutions can lead to improved equipment lifespan and reliability through better temperature control and reduced thermal stress.

What are the different types of hybrid cooling systems (e.g., combining liquid and air cooling)?

Diverse types of hybrid cooling systems exist, reflecting the adaptability of the concept. One common configuration pairs direct liquid cooling, such as cold plates attached to processors, with traditional air cooling for other components.

Another might combine free cooling, utilizing outside air when ambient temperatures are suitable, with chiller-based cooling for periods of high heat load or warmer weather. These integrated designs strive to maximize efficiency by selecting the most appropriate method based on specific needs and conditions.
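The switching logic for a free-cooling and chiller combination can be expressed as a simple rule on outdoor temperature. The sketch below assumes a coolant loop with a supply setpoint, a return temperature, and a fixed heat-exchanger approach; all three values are hypothetical placeholders, and real plants typically work from wet-bulb or dry-bulb readings with hysteresis and vendor-specific controls.

```python
def select_cooling_mode(outdoor_c, supply_setpoint_c=18.0, return_c=28.0, approach_k=4.0):
    """Pick 'free', 'partial', or 'chiller' for a free-cooling plant with chiller backup."""
    # Coldest coolant the outdoor heat exchanger can deliver at this outdoor temperature.
    best_supply_c = outdoor_c + approach_k
    if best_supply_c <= supply_setpoint_c:
        return "free"      # outdoor air alone meets the setpoint; chillers can idle
    if best_supply_c < return_c:
        return "partial"   # pre-cool with outdoor air, chillers trim the remainder
    return "chiller"       # outdoor air is too warm to help

for temp in (5, 16, 27):
    print(temp, select_cooling_mode(temp))
```

The "partial" band is where much of the efficiency of such hybrids comes from: even when outside air cannot meet the setpoint on its own, it can still reduce the load the chillers must carry.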

What factors should be considered when selecting a hybrid cooling model for a specific application?

Choosing the right hybrid cooling model requires careful consideration of several factors. Heat load distribution within the environment is crucial, as is the local climate and availability of resources like water.

Facility infrastructure limitations, such as space and power capacity, must also be taken into account. Furthermore, long-term expansion plans and the evolving needs of the application play a role in determining the most suitable hybrid cooling strategy.

How does the initial cost and long-term operational cost of hybrid cooling compare to other cooling solutions?

The initial investment for hybrid cooling systems can often be higher than simpler, traditional methods due to the complexity of integrating multiple technologies. However, the long-term operational costs tend to be lower because of the improved energy efficiency they provide.

Over time, the reduced energy consumption and potential for prolonged equipment lifespan can offset the initial capital expenditure, making hybrid cooling a cost-effective choice in the long run, particularly for high-density applications.
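The capital-versus-operating trade-off described above can be checked with a simple payback calculation. The figures below are hypothetical, and the sketch deliberately ignores discounting, maintenance contracts, and energy-price changes; it only shows how the extra upfront cost of a hybrid system is weighed against its annual savings.

```python
import math

def payback_years(base_capex, base_opex, hybrid_capex, hybrid_opex):
    """Years until the hybrid system's lower running cost repays its extra upfront cost."""
    extra_capex = hybrid_capex - base_capex
    annual_saving = base_opex - hybrid_opex
    if annual_saving <= 0:
        return math.inf  # never pays back
    return max(0.0, extra_capex / annual_saving)

def cumulative_cost(capex, annual_opex, years):
    """Undiscounted total cost after a number of years."""
    return capex + annual_opex * years

# Hypothetical figures: air-only vs. hybrid cooling for one data hall
base = {"capex": 1_000_000, "opex": 300_000}
hybrid = {"capex": 1_400_000, "opex": 180_000}

years_to_payback = payback_years(base["capex"], base["opex"], hybrid["capex"], hybrid["opex"])
print(f"payback: {years_to_payback:.1f} years")
for years in (3, 5, 10):
    print(years, cumulative_cost(base["capex"], base["opex"], years),
          cumulative_cost(hybrid["capex"], hybrid["opex"], years))
```

With these made-up numbers the hybrid system costs more through year three but is ahead from roughly year four onward, matching the pattern described above of higher initial investment offset by lower running costs.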