The AI Imperative

Fast deployment is no longer a luxury; it’s a necessity in today’s competitive AI landscape. Businesses are racing to implement AI solutions, and those who hesitate risk falling behind. From personalized customer experiences to optimized supply chains and groundbreaking research, AI is transforming every industry. The companies that can develop, train, and deploy AI models quickly will gain a significant competitive edge, leaving slower, more traditional organizations struggling to catch up. The time to act is now.

The demand for AI applications is accelerating at an unprecedented rate. Industries ranging from healthcare and finance to manufacturing and retail are seeking to leverage the power of AI to improve efficiency, drive innovation, and gain a deeper understanding of their customers.

This surge in demand has created a highly competitive environment, where companies are vying for talent, resources, and market share. Those who can rapidly deploy AI solutions will be best positioned to capture these opportunities and establish themselves as leaders in their respective fields.

However, the path to AI success is not without its challenges. Many organizations are finding that their existing IT infrastructure is simply not up to the task of supporting the demanding requirements of modern AI workloads. Traditional datacenter build-outs can take months, even years, to complete, creating a significant bottleneck in the AI development lifecycle.

These delays can result in missed market opportunities, lost revenue, and a general sense of frustration among AI teams. It’s a costly equation that demands a faster, more agile approach.

The Traditional Datacenter Build

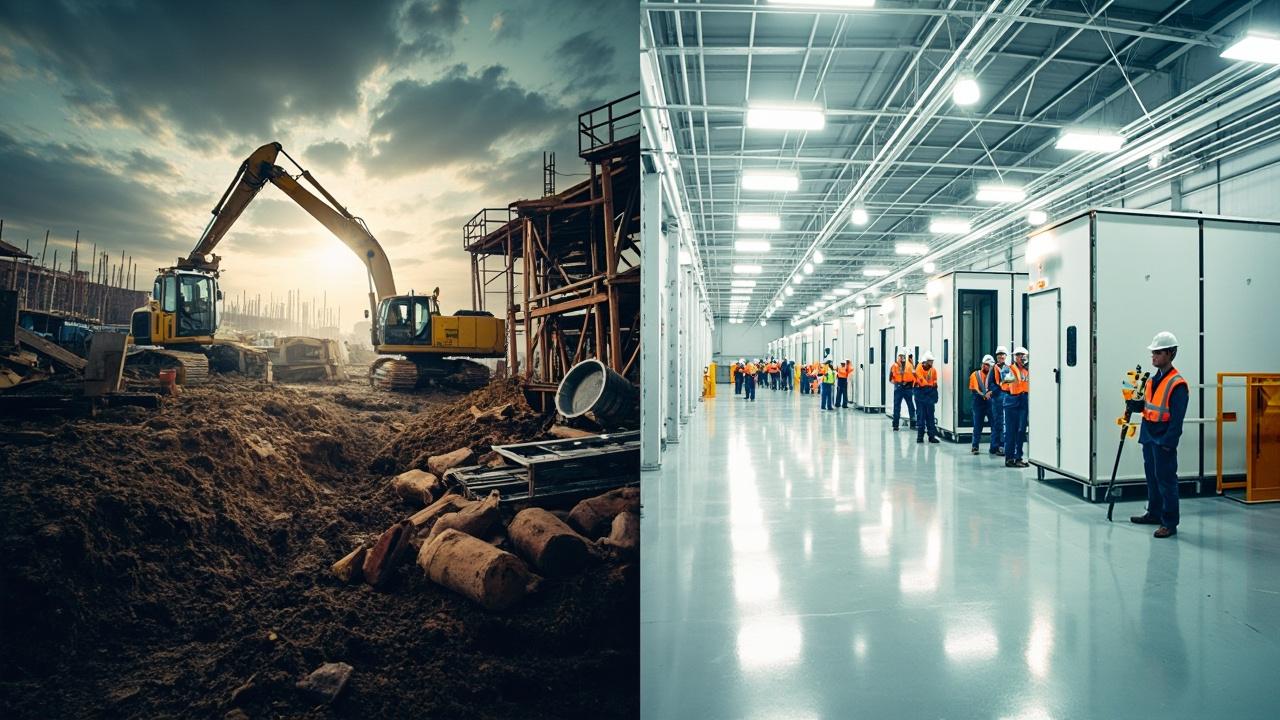

Building a traditional datacenter from the ground up is a monumental undertaking, and it’s crucial to understand just how lengthy and complex the process can be. We’re not talking about weeks or months; we’re often looking at a two-year commitment, a significant time sink that can cripple your AI ambitions. This timeline isn’t just an estimate; it’s the reality for many organizations embarking on this path.

The process starts with site selection, a phase that can be surprisingly protracted. Finding the right location involves numerous considerations, including proximity to power grids, access to fiber optic networks, and local zoning regulations. Once a site is identified, securing the necessary permits can take months, if not longer, depending on the jurisdiction and environmental impact assessments.

Then comes the actual construction. Pouring concrete, erecting walls, and installing the core infrastructure (power, cooling, and networking) are all time-intensive activities. Power and cooling infrastructure are especially critical for AI workloads, which demand far more energy than typical IT applications.

This often requires working with utility companies to upgrade grid connections, as well as installing specialized cooling systems, adding further delays. Procuring the necessary equipment is another time-consuming process: a transformer, for example, may have a lead time of several months before it is delivered to the site.

To illustrate the potential consequences, consider a hypothetical scenario: a financial services company aiming to deploy an AI-powered fraud detection system. They begin planning a new datacenter to house their AI cluster, anticipating a two-year build time. However, unexpected delays arise during the permitting phase due to environmental concerns. This pushes the project back by six months.

Meanwhile, a competitor, leveraging a cloud-based AI platform, launches a similar fraud detection system in just a few months, capturing a significant share of the market. By the time the first company’s datacenter is finally operational, they’ve not only missed a critical market window, but also lost valuable revenue and competitive ground. These delays are simply unacceptable in the age of AI, and underscore the importance of fast deployment solutions.

| Phase | Typical Timeframe |
|---|---|
| Site Selection & Permitting | 6-12 Months |
| Construction | 9-15 Months |
| Power & Cooling Infrastructure | 6-12 Months |
| Networking & Security | 3-6 Months |
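Summed end to end, the table's ranges come to 24 to 45 months. In practice some phases can overlap, which is one way projects land closer to the two-year mark mentioned above. The sketch below compares a fully sequential schedule with one overlapped schedule; the overlap pattern (power and cooling work running alongside construction, with networking following) is an assumption for illustration, not a description of any particular project.

```python
# Phase durations in months as (low, high), taken from the table above.
PHASES = {
    "site_selection_permitting": (6, 12),
    "construction": (9, 15),
    "power_cooling": (6, 12),
    "networking_security": (3, 6),
}


def sequential_months(phases: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Total duration if every phase waits for the previous one to finish."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


def overlapped_months(phases: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Critical-path duration under an ASSUMED overlap: power and cooling run
    in parallel with construction, so only the longer of the two counts."""
    site = phases["site_selection_permitting"]
    build = phases["construction"]
    power = phases["power_cooling"]
    net = phases["networking_security"]
    low = site[0] + max(build[0], power[0]) + net[0]
    high = site[1] + max(build[1], power[1]) + net[1]
    return low, high


if __name__ == "__main__":
    print("sequential:", sequential_months(PHASES))  # (24, 45)
    print("overlapped:", overlapped_months(PHASES))  # (18, 33)
```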

The AI Cluster’s Unique Needs

AI/ML workloads aren’t your grandfather’s spreadsheet applications. They demand a fundamentally different infrastructure paradigm compared to traditional computing. Think of it this way: a standard server is like a fuel-efficient sedan, perfectly adequate for everyday tasks. An AI cluster, however, is a Formula 1 race car; it needs a specialized engine, tires, and aerodynamics to perform optimally. This translates into specific hardware and software requirements that legacy infrastructure simply can’t handle efficiently.

One of the most critical differences lies in the demand for high-density computing. AI models, especially deep learning models, require massive parallel processing power. This necessitates the use of specialized hardware like Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs), which are designed to accelerate matrix multiplications and other operations essential for AI training and inference.
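To see why accelerators matter, it helps to count the work in a single matrix multiplication: multiplying an m x k matrix by a k x n matrix takes m·n·k multiply-adds, conventionally counted as 2·m·n·k floating-point operations (FLOPs). The sketch below turns that count into an idealized runtime at a given sustained throughput. The throughput figures are hypothetical placeholders, not measurements of any real CPU or GPU.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply and one add per term."""
    return 2 * m * k * n


def seconds_at(flops: float, flops_per_second: float) -> float:
    """Idealized runtime at a sustained throughput (ignores memory and I/O stalls)."""
    return flops / flops_per_second


if __name__ == "__main__":
    work = matmul_flops(8192, 8192, 8192)
    # HYPOTHETICAL sustained throughputs, chosen only to show the scale gap.
    cpu_rate = 1e12    # 1 TFLOP/s
    gpu_rate = 100e12  # 100 TFLOP/s
    print(f"{work:.3e} FLOPs")
    print(f"CPU: {seconds_at(work, cpu_rate):.3f} s, GPU: {seconds_at(work, gpu_rate):.5f} s")
```

Training repeats products like this many times per step, so even a modest per-operation gap compounds quickly.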

Furthermore, these specialized processors need to be interconnected via high-bandwidth networking to avoid bottlenecks during data transfer. Consider these points:

- High-Density Computing: GPUs and TPUs for parallel processing

- Specialized Hardware: Optimized for AI/ML algorithms

- High-Bandwidth Networking: Fast data transfer between nodes
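One way to see why interconnect bandwidth matters is to estimate how long it takes to synchronize gradients in data-parallel training. In the widely used ring all-reduce algorithm, each of N nodes sends 2·(N−1)/N times the size of the gradient buffer. The sketch below applies that formula under idealized assumptions (full link bandwidth, no latency, no overlap with compute); the model size, node count, and link speeds are hypothetical examples.

```python
def ring_allreduce_bytes_per_node(buffer_bytes: float, nodes: int) -> float:
    """Bytes each node sends in a ring all-reduce: 2 * (N - 1) / N * buffer size."""
    if nodes < 2:
        return 0.0  # nothing to exchange with a single node
    return 2 * (nodes - 1) / nodes * buffer_bytes


def allreduce_seconds(buffer_bytes: float, nodes: int, link_gbit_per_s: float) -> float:
    """Idealized transfer time: ignores latency, congestion, and overlap with compute."""
    link_bytes_per_s = link_gbit_per_s * 1e9 / 8
    return ring_allreduce_bytes_per_node(buffer_bytes, nodes) / link_bytes_per_s


if __name__ == "__main__":
    # HYPOTHETICAL: 1 billion fp16 gradients (2 bytes each) across 8 nodes.
    grads = 1e9 * 2
    for gbit in (10, 100, 400):
        print(f"{gbit:>4} Gbit/s: {allreduce_seconds(grads, 8, gbit):.2f} s per sync")
```

Because this synchronization can happen on every training step, the per-sync time is multiplied by the number of steps.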

Legacy infrastructure often lacks the power, cooling, and networking capabilities to support these high-density configurations. Trying to force-fit an AI cluster into a traditional data center can result in performance bottlenecks, increased latency, and ultimately, a slower and less effective AI implementation. Fast deployment matters here too, because it lets teams test the setup and iterate on it sooner.
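A quick way to check whether an existing facility can host a GPU cluster is to compare the planned draw per server with what each rack position can supply and cool. The sketch below does that arithmetic; every figure in it (server draw, server count, per-rack limits) is a hypothetical placeholder to be replaced with vendor specifications and facility data.

```python
import math


def racks_needed(total_servers: int, server_kw: float, rack_limit_kw: float) -> int:
    """Racks required when no rack may exceed rack_limit_kw of power draw."""
    per_rack = math.floor(rack_limit_kw / server_kw)
    if per_rack == 0:
        raise ValueError("a single server exceeds the per-rack power limit")
    return math.ceil(total_servers / per_rack)


if __name__ == "__main__":
    # HYPOTHETICAL values: 32 GPU servers drawing 10 kW each.
    servers, server_kw = 32, 10.0
    for limit_kw in (8.0, 15.0, 40.0):
        try:
            print(f"{limit_kw:>5} kW/rack -> {racks_needed(servers, server_kw, limit_kw)} racks")
        except ValueError as err:
            print(f"{limit_kw:>5} kW/rack -> {err}")
```

Cooling follows the same logic: nearly all of that electrical draw ends up as heat the facility must remove.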

Moreover, the sheer volume of data used in AI/ML training requires massive storage capacity, often exceeding the capabilities of traditional storage systems. This can involve anything from object stores holding raw data to high-performance file systems used for model training. Protecting data at this scale also calls for a new approach to security.
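A rough storage estimate can be assembled from a few inputs: the raw dataset, any processed copies, and model checkpoints, whose size scales with parameter count. The sketch below is a back-of-the-envelope model under stated assumptions. The bytes-per-parameter figure in particular depends on numeric precision and on whether optimizer state is saved, so treat all example values as hypothetical.

```python
def storage_tb(
    raw_tb: float,
    processed_copies: int,
    params: float,
    bytes_per_param: float,
    checkpoints_kept: int,
    replication: int = 1,
) -> float:
    """Approximate capacity in terabytes (1 TB = 1e12 bytes).

    raw_tb: size of the raw dataset.
    processed_copies: ASSUMED number of preprocessed copies, each the raw size.
    bytes_per_param: bytes stored per parameter in one checkpoint.
    replication: copies kept by the storage system for durability.
    """
    data = raw_tb * (1 + processed_copies)
    checkpoints = params * bytes_per_param * checkpoints_kept / 1e12
    return (data + checkpoints) * replication


if __name__ == "__main__":
    # HYPOTHETICAL: 200 TB raw, 1 processed copy, 70B parameters at 16 bytes each
    # (ASSUMED to include optimizer state), 10 checkpoints kept, 3-way replication.
    print(f"{storage_tb(200, 1, 70e9, 16, 10, replication=3):.0f} TB")
```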

The Cost of Delay

The true expense of postponing your AI initiatives extends far beyond the initially allocated infrastructure budget. While cost overruns on datacenter construction are certainly painful, the *hidden* costs associated with delayed AI deployments can be crippling to an organization.

These costs manifest in various ways, eroding potential profits and hindering overall growth. Imagine a competitor launching an AI-powered product six months before you: the head start they gain in market share and customer acquisition can be difficult, if not impossible, to overcome.

Consider these factors when assessing the true cost of delay:

- Lost Revenue: Every month your AI solution is delayed is a month of potential revenue lost. This could be from new products, improved services, or optimized processes that AI would enable.

- Missed Market Opportunities: The AI landscape is rapidly evolving. Delaying your deployment could mean missing out on emerging market trends and opportunities that competitors are quick to seize.

- Delayed Product Launches: AI is often a critical component of new product development. Delays in AI infrastructure directly translate to delays in product launches, giving competitors a significant advantage.

To illustrate the financial impact, consider a simple calculation. Suppose a company projects that an AI-powered recommendation engine will generate an additional $1 million in annual revenue. If the deployment is delayed by six months, that’s a loss of $500,000 in potential revenue. This doesn’t even account for the compounding effect of lost market share and the potential for competitors to establish a stronger foothold.
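The arithmetic above generalizes to a small function. This sketch assumes revenue accrues evenly across the year and ignores ramp-up, discounting, and the compounding market-share effects just mentioned, so it gives a floor rather than a full estimate.

```python
def revenue_lost_to_delay(annual_revenue: float, delay_months: float) -> float:
    """Revenue forgone during a delay, assuming it accrues evenly each month."""
    if delay_months < 0:
        raise ValueError("delay_months must be non-negative")
    return annual_revenue * delay_months / 12


if __name__ == "__main__":
    # The example from the text: $1M per year, six-month delay.
    print(f"${revenue_lost_to_delay(1_000_000, 6):,.0f}")  # $500,000
```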

Furthermore, consider the opportunity cost of your IT team’s time. Instead of focusing on core AI development and model training, they are bogged down with the complexities of slow infrastructure deployment. This not only delays AI initiatives but also diminishes the productivity and morale of valuable team members. With fast deployment strategies, your IT staff can focus on what matters: getting AI models into production and driving business value.

Agile Infrastructure

What Is Agile Infrastructure?

Agile infrastructure encompasses a range of innovative solutions designed to accelerate AI deployments. This includes pre-fabricated data centers, which are built in a factory and shipped to the desired location for quick assembly. Modular data centers offer a similar approach, providing scalable building blocks that can be easily added or removed as needed.

Cloud-based AI platforms represent another form of agile infrastructure, offering on-demand access to computing resources, storage, and specialized AI tools. Each of these options provides ways to drastically reduce the time from project inception to initial AI training.

Benefits of the Agile Approach

The primary advantage of agile infrastructure is the dramatically reduced deployment time. Instead of waiting years for a traditional datacenter to be built, organizations can have a fully functional AI cluster up and running in a matter of weeks or months. This speed enables faster experimentation, quicker iteration on AI models, and a faster time to market for AI-powered products and services. Beyond speed, agile infrastructure offers greater flexibility and scalability.

Organizations can easily adjust their computing resources to meet changing demands, scaling up or down as needed. This reduces the risk of over-provisioning or under-provisioning infrastructure and helps keep resources well utilized. Because the upfront investment is typically much lower than building a new datacenter, organizations can start small and scale as their AI initiatives grow, reducing financial risk.
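The over-provisioning point can be made concrete by comparing average utilization when capacity is fixed at the peak with utilization when capacity tracks demand. The sketch below uses a made-up monthly demand profile in GPU-node units; real elastic capacity also has limits (quotas, provisioning time) that it ignores.

```python
def utilization(demand: list[int], capacity: list[int]) -> float:
    """Share of provisioned capacity actually used over the period."""
    used = sum(min(d, c) for d, c in zip(demand, capacity))
    return used / sum(capacity)


if __name__ == "__main__":
    # HYPOTHETICAL monthly demand, in GPU nodes.
    demand = [4, 6, 8, 16, 10, 6]
    fixed = [max(demand)] * len(demand)  # built once, sized for the peak
    elastic = list(demand)               # scaled up and down each month
    print(f"fixed:   {utilization(demand, fixed):.0%}")
    print(f"elastic: {utilization(demand, elastic):.0%}")
```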

On-Premise vs. Cloud-Based Solutions

Selecting the right agile infrastructure solution depends on an organization’s specific needs and priorities. On-premise modular data centers offer greater control over data security and compliance, while cloud-based solutions provide unparalleled scalability and ease of management. Hybrid approaches, combining on-premise and cloud resources, can also be a viable option. Regardless of the chosen approach, agile infrastructure empowers organizations to accelerate their AI initiatives and gain a competitive advantage through *fast deployment*.

Fast Deployment

In today’s fiercely competitive landscape, companies that can rapidly deploy and iterate on AI solutions gain a significant edge. This agility translates directly into faster innovation, quicker market entry, and ultimately, increased profitability. Organizations that can turn their data into AI solutions quickly will have the upper hand in the marketplace.

Real-World Success Stories

Consider Company X, a leading financial services firm that was struggling to keep pace with fraud detection. By leveraging a pre-fabricated data center solution, they were able to deploy a sophisticated AI-powered fraud detection system in just four months, a process that would have taken over a year with a traditional build-out. This allowed them to dramatically reduce fraudulent transactions, saving millions of dollars annually and improving customer satisfaction.

Similarly, Company Y, a cutting-edge pharmaceutical company, needed a high-performance computing cluster to accelerate its drug discovery process. By opting for a cloud-based AI platform, they eliminated the need for significant upfront capital investment and were able to begin training complex AI models within weeks, significantly shortening the time it takes to bring life-saving drugs to market.

These are not isolated incidents; they are representative of a growing trend where companies are realizing the benefits of agile infrastructure.

Quantifying the Time Advantage

The time savings achieved through agile infrastructure are not just marginal; they are often transformative. Instead of waiting upwards of two years for a traditional datacenter to come online, organizations can deploy AI clusters in a matter of weeks or months.

This dramatic reduction in deployment time translates directly into a faster return on investment (ROI) and a greater ability to adapt to changing market conditions. The ability to respond quickly to market opportunities and customer needs is crucial for maintaining a competitive edge.

The Need for Speed in AI/ML Model Development

The AI/ML development lifecycle is inherently iterative, requiring constant experimentation and refinement. The ability to rapidly deploy and test new models is essential for achieving optimal performance and accuracy. A slow deployment cycle can stifle innovation and prevent data scientists from effectively iterating on their models.
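A simple way to see the effect on iteration is to count how many experiment cycles fit into a fixed planning window once the infrastructure is ready. The sketch assumes a constant cycle length and no model work before the infrastructure is available. The lead times loosely follow the two-year and four-month figures used elsewhere in this article; the window and cycle length are hypothetical.

```python
def cycles_in_window(window_months: float, lead_time_months: float, cycle_months: float) -> int:
    """Complete train-evaluate-refine cycles possible after infrastructure is ready."""
    if cycle_months <= 0:
        raise ValueError("cycle_months must be positive")
    remaining = max(0.0, window_months - lead_time_months)
    return int(remaining // cycle_months)


if __name__ == "__main__":
    # HYPOTHETICAL: a 30-month planning window and one-month experiment cycles.
    for lead in (24, 4):
        print(f"lead time {lead:>2} months -> {cycles_in_window(30, lead, 1)} cycles")
```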

Embracing agile infrastructure solutions allows organizations to shorten the feedback loop, accelerating the development of AI/ML models and enabling them to deliver more impactful solutions to the business. This requires a commitment to fast deployment and a willingness to embrace new technologies and approaches.

Choosing the Right Solution

Selecting the optimal agile infrastructure for your AI initiatives isn’t a one-size-fits-all endeavor. It demands careful consideration of your specific AI workload demands, budgetary limitations, and overarching business objectives. A crucial first step involves a thorough assessment of your AI/ML models.

Understanding the computational intensity, data storage needs, and networking requirements of your workloads will guide you towards the appropriate infrastructure type. For instance, deep learning models often necessitate specialized hardware like GPUs or TPUs, influencing your choice between cloud-based GPU instances or on-premise solutions with dedicated GPU servers.

Furthermore, the scale of your data sets plays a significant role; massive data volumes might necessitate high-bandwidth, low-latency storage solutions that could be more cost-effectively managed on-premise or through specialized cloud storage services.

Budgetary constraints are, of course, a primary consideration. Cloud-based solutions typically offer a pay-as-you-go model, which can be attractive for organizations with fluctuating AI workloads or limited upfront capital. However, for sustained, high-intensity AI training, the long-term costs of cloud-based resources might exceed those of an on-premise deployment.
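One way to frame this trade-off is a break-even point: the month at which the cumulative cloud bill catches up with the on-premise capital outlay plus its running costs. The sketch below assumes flat monthly costs and ignores depreciation, financing, hardware refresh, and price changes; every dollar figure is hypothetical.

```python
import math


def breakeven_month(capex: float, onprem_monthly: float, cloud_monthly: float) -> float:
    """First whole month at which cumulative on-premise cost is no higher than cloud cost.

    Solves capex + onprem_monthly * t <= cloud_monthly * t for t.
    Returns math.inf if cloud never becomes more expensive at these rates.
    """
    savings_per_month = cloud_monthly - onprem_monthly
    if savings_per_month <= 0:
        return math.inf
    return math.ceil(capex / savings_per_month)


if __name__ == "__main__":
    # HYPOTHETICAL: $3M of hardware costing $50k/month to run, vs. $200k/month in the cloud.
    print(breakeven_month(3_000_000, 50_000, 200_000), "months")
```

Under these placeholder numbers, a workload expected to run steadily for well beyond the break-even month leans toward on-premise, while a bursty or short-lived one may never reach it.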

Modular data centers present a middle ground, offering a balance between capital expenditure and operational flexibility. These pre-fabricated, scalable solutions can be deployed more rapidly than traditional datacenters, allowing for faster experimentation and iteration. Ultimately, a detailed cost-benefit analysis, factoring in hardware costs, power consumption, cooling requirements, and IT staffing expenses, is essential to determine the most economically viable option.
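The factors listed above can be combined into a rough total-cost-of-ownership comparison. Power and cooling are folded in through Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy. The sketch below is a simplified model under stated assumptions; all inputs, including the PUE values and electricity price, are hypothetical.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average: 8,760 hours / 12


@dataclass
class Option:
    name: str
    capex: float                # upfront hardware and facility spend, USD
    it_load_kw: float           # average IT power draw
    pue: float                  # facility energy / IT energy (>= 1.0)
    staff_monthly: float        # IT staffing attributable to this option, USD
    other_monthly: float = 0.0  # e.g. cloud fees or colocation rent, USD


def tco(option: Option, months: int, usd_per_kwh: float) -> float:
    """Capex plus energy (IT load scaled by PUE) plus recurring monthly costs."""
    energy_kwh = option.it_load_kw * option.pue * HOURS_PER_MONTH * months
    recurring = (option.staff_monthly + option.other_monthly) * months
    return option.capex + energy_kwh * usd_per_kwh + recurring


if __name__ == "__main__":
    # HYPOTHETICAL inputs for a 36-month horizon at $0.10/kWh.
    # Cloud energy is assumed to be included in its monthly fee.
    options = [
        Option("on-premise", 3_000_000, 320, 1.5, 40_000),
        Option("modular", 3_400_000, 320, 1.3, 30_000),
        Option("cloud", 0, 0, 1.0, 15_000, other_monthly=200_000),
    ]
    for opt in sorted(options, key=lambda o: tco(o, 36, 0.10)):
        print(f"{opt.name:<11} ${tco(opt, 36, 0.10):,.0f}")
```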

Beyond technical and financial considerations, security and compliance requirements cannot be overlooked. Depending on the nature of your data and the industry you operate in, you might be subject to strict regulations regarding data privacy, security, and sovereignty. Cloud providers often offer robust security features and compliance certifications, but it’s crucial to verify that their offerings align with your specific requirements.

On-premise solutions offer greater control over data security, but also necessitate a higher level of internal expertise and investment in security infrastructure. A hybrid approach, combining the flexibility of the cloud with the security of an on-premise environment, can be an effective strategy for organizations with stringent compliance needs while facilitating fast deployment.

| Factor | Considerations |
|---|---|
| AI Workload | Computational intensity, data storage needs, networking requirements, specialized hardware (GPUs, TPUs) |
| Budget | Upfront capital, operational expenses, cloud costs, on-premise hardware costs, IT staffing |
| Security & Compliance | Data privacy regulations, industry-specific compliance requirements, data sovereignty |
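The factors in this table can feed a simple weighted scoring matrix, a general decision-making technique rather than anything specific to AI infrastructure. Weights reflect organizational priorities and scores (1 to 5 here) come from your own assessment; the numbers below are hypothetical placeholders, not a recommendation.

```python
WEIGHTS = {"ai_workload": 0.4, "budget": 0.35, "security_compliance": 0.25}

# HYPOTHETICAL 1-5 scores: how well each option fits each factor.
SCORES = {
    "cloud": {"ai_workload": 4, "budget": 4, "security_compliance": 3},
    "modular": {"ai_workload": 4, "budget": 3, "security_compliance": 4},
    "on_premise": {"ai_workload": 5, "budget": 2, "security_compliance": 5},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of factor scores multiplied by weights, normalized by total weight."""
    total_weight = sum(weights.values())
    return sum(scores[f] * w for f, w in weights.items()) / total_weight


def rank(options: dict[str, dict[str, float]], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Options ordered from best to worst weighted score."""
    scored = [(name, weighted_score(s, weights)) for name, s in options.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank(SCORES, WEIGHTS):
        print(f"{name:<11} {score:.2f}")
```

Changing the weights (for example, raising budget for a cash-constrained team) can reorder the result, which is the point of making them explicit.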

Future-Proofing Your AI Infrastructure

The landscape of AI is in constant flux, with new models, algorithms, and hardware emerging at a breakneck pace. Sticking with rigid, traditional infrastructure approaches is akin to pouring a house’s foundation before the blueprint is final: you’re setting yourself up for costly and time-consuming renovations down the line.

Future-proofing your AI infrastructure means building for adaptability, ensuring you can seamlessly integrate new technologies and scale your resources as your AI initiatives evolve. This is where investing in agility becomes paramount.

A key aspect of future-proofing is to choose solutions that offer seamless integration with emerging technologies. Whether it’s the latest generation of GPUs, advancements in networking fabrics, or new storage paradigms, your infrastructure should be designed to accommodate these innovations without requiring a complete overhaul.

This requires a strategic approach, prioritizing modularity and interoperability over proprietary, locked-in systems. Embracing open standards and APIs will allow you to mix and match components from different vendors, creating a flexible and resilient AI ecosystem.
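In code, the same principle often shows up as a thin interface that internal tooling depends on, so a backend can be swapped without touching its callers. The sketch below uses Python's `typing.Protocol`; the `ComputeBackend` interface and both backend classes are hypothetical stand-ins, not real vendor SDKs.

```python
import itertools
from typing import Protocol


class ComputeBackend(Protocol):
    """Hypothetical minimal interface that any GPU provider adapter must satisfy."""

    def provision(self, gpus: int) -> str: ...
    def release(self, handle: str) -> None: ...


class OnPremBackend:
    """Hypothetical adapter for an in-house cluster."""

    def __init__(self) -> None:
        self._ids = itertools.count()
        self.active: set[str] = set()

    def provision(self, gpus: int) -> str:
        handle = f"onprem-{next(self._ids)}-{gpus}gpu"
        self.active.add(handle)
        return handle

    def release(self, handle: str) -> None:
        self.active.discard(handle)


class CloudBackend:
    """Hypothetical adapter for a cloud provider; a real one would call its SDK."""

    def __init__(self, region: str) -> None:
        self.region = region
        self._ids = itertools.count()
        self.active: set[str] = set()

    def provision(self, gpus: int) -> str:
        handle = f"{self.region}-{next(self._ids)}-{gpus}gpu"
        self.active.add(handle)
        return handle

    def release(self, handle: str) -> None:
        self.active.discard(handle)


def run_training_job(backend: ComputeBackend, gpus: int) -> str:
    """Caller code depends only on the interface, not on a specific vendor."""
    handle = backend.provision(gpus)
    try:
        return f"trained on {handle}"
    finally:
        backend.release(handle)  # always give capacity back


if __name__ == "__main__":
    for backend in (OnPremBackend(), CloudBackend("region-a")):
        print(run_training_job(backend, 8))
```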

Building strong vendor partnerships is another critical element of successful agility. Seek out providers who are not just selling products, but offering ongoing support, expertise, and a commitment to innovation. A good vendor will act as a strategic advisor, helping you navigate the complexities of AI infrastructure and ensuring that your solutions remain cutting-edge.

This includes access to training, best practices, and early access to new technologies. Ultimately, embracing agile infrastructure combined with smart partnerships allows for fast deployment and sustained competitive advantage in the ever-evolving AI space.

Case Study

One company, a leading provider of personalized healthcare solutions, faced a common dilemma: their ambitious AI roadmap was being throttled by the glacial pace of traditional infrastructure procurement. They aimed to leverage AI to analyze patient data, predict health risks, and personalize treatment plans. However, their existing infrastructure was inadequate to handle the intense computational demands of AI/ML workloads.

The prospect of building a new datacenter loomed, threatening to delay their AI initiatives by two years or more. Recognizing the immense competitive pressure in the healthcare sector, they knew they couldn’t afford to wait.

Instead of embarking on a lengthy and costly datacenter construction project, they opted for a modular, pre-fabricated data center solution. This approach allowed them to avoid much of the lengthy site selection, permitting, and traditional construction process. The modular data center was pre-engineered and built off-site, then rapidly deployed and integrated with their existing IT environment. This dramatically reduced the time to deployment compared to traditional methods.

The key to their success was a combination of careful planning, strategic partnerships, and a relentless focus on speed. They collaborated closely with a vendor specializing in modular data centers, ensuring that the solution was tailored to their specific AI workload requirements. They also implemented a robust project management framework to track progress, identify potential roadblocks, and ensure that the project stayed on schedule. This enabled a much faster deployment, accelerating their timeline by months.

Conclusion

The message is clear: the time for glacial infrastructure deployments is over. The AI revolution is here, and those who cling to outdated methodologies will be left behind. We’ve seen how the traditional datacenter build process can stretch out for years, draining resources and squandering opportunities while competitors surge ahead. The unique demands of AI/ML workloads only exacerbate these challenges, rendering legacy infrastructure increasingly obsolete.

By embracing agile infrastructure solutions, you can break free from these constraints and unlock the true potential of AI. Whether it’s through pre-fabricated data centers, modular designs, or cloud-based platforms, the key is to prioritize speed, flexibility, and scalability.

The case study above shows that a rapid setup is achievable: AI training can begin within months rather than years. With fast deployment, the IT department becomes a facilitator of faster model creation and faster feedback from the business, which can translate into more innovative products and higher revenue.

The question now isn’t whether you can afford to adopt agile infrastructure, but rather, can you afford not to? Are you prepared to risk missed market opportunities, delayed product launches, and the frustration of your talented AI team? The future belongs to those who can rapidly iterate and adapt, turning innovative ideas into tangible AI-powered solutions. What steps will you take today to accelerate your AI journey and secure your place in the future?

Frequently Asked Questions

What are the key benefits of fast deployment?

The primary advantages of rapid deployment revolve around enhanced agility and responsiveness. Swiftly launching new features or updates allows businesses to quickly adapt to changing market demands and customer feedback.

This accelerated delivery cycle also empowers organizations to gain a competitive edge by being first to market with innovative solutions. Shorter deployment cycles also reduce time-to-value, so projects start delivering benefits sooner.

What tools and technologies facilitate fast deployment?

A range of tools and technologies enable faster deployment. Docker packages applications and their dependencies into containers, and Kubernetes orchestrates those containers across a cluster.

Continuous Integration and Continuous Delivery (CI/CD) pipelines, facilitated by tools such as Jenkins or GitLab CI, automate the build, test, and deployment processes. Cloud platforms, such as AWS, Azure, and Google Cloud, provide scalable and readily available infrastructure resources.

How does infrastructure-as-code (IaC) contribute to faster deployments?

Infrastructure-as-code significantly speeds up deployments by automating infrastructure provisioning. Rather than manually configuring servers, networks, and storage, IaC allows defining infrastructure through code. Tools like Terraform and CloudFormation can then automatically provision and manage these resources, ensuring consistency and repeatability across environments. This drastically reduces the time and effort required to set up deployment environments.
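The core idea these tools share is declarative reconciliation: you describe the desired state, the tool compares it with what currently exists, and it computes the changes needed. The sketch below illustrates that "plan" step in plain Python with hypothetical resource names; it is not how Terraform or CloudFormation are implemented internally, only the concept.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Compute the actions needed to move `current` to `desired`.

    Both arguments map a resource name to its configuration.
    """
    create = sorted(desired.keys() - current.keys())
    delete = sorted(current.keys() - desired.keys())
    update = sorted(
        name for name in desired.keys() & current.keys() if desired[name] != current[name]
    )
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    # HYPOTHETICAL resources, as they might be declared in version-controlled code.
    desired = {
        "gpu-pool": {"nodes": 8, "type": "gpu-large"},
        "dataset-bucket": {"versioning": True},
    }
    current = {
        "gpu-pool": {"nodes": 4, "type": "gpu-large"},
        "old-scratch-disk": {"size_gb": 500},
    }
    print(plan(desired, current))
    # Once the environment matches the declaration, the plan is empty,
    # so re-running it changes nothing.
    print(plan(desired, desired))
```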

What are the challenges of implementing fast deployment practices?

Implementing fast deployment practices can present several challenges. One hurdle is managing the increased complexity that arises from frequent releases. Ensuring application stability and reliability requires robust testing and monitoring strategies. Another challenge is organizational resistance to change, requiring a cultural shift towards automation and collaboration. Security concerns also need careful consideration, ensuring the deployment process doesn’t introduce vulnerabilities.

How can we measure the success of our fast deployment strategy?

The success of a fast deployment strategy can be measured through several key metrics. Deployment frequency, reflecting how often releases occur, is a primary indicator. Lead time, the time it takes to go from code commit to production deployment, should be reduced.

Mean Time To Recovery (MTTR), measuring how quickly service is restored after a failure, indicates system resilience. Monitoring customer satisfaction and business outcomes provides insight into the overall impact.
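These indicators can be computed from simple deployment and incident records. The sketch below assumes a hypothetical record format (commit and deploy timestamps per change, start and resolution timestamps per incident); real data would come from your CI/CD and incident-tracking systems.

```python
from datetime import datetime, timedelta
from statistics import mean


def deployment_frequency(deploys: list[datetime], days: int) -> float:
    """Average deployments per day over the observation window."""
    return len(deploys) / days


def mean_lead_time(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from code commit to production deployment."""
    return timedelta(seconds=mean((d - c).total_seconds() for c, d in changes))


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to recovery: average time from failure to restored service."""
    return timedelta(seconds=mean((r - s).total_seconds() for s, r in incidents))


if __name__ == "__main__":
    t = datetime(2024, 1, 1, 9, 0)
    # HYPOTHETICAL records over a 7-day window: (commit time, deploy time) pairs.
    changes = [
        (t, t + timedelta(hours=4)),
        (t + timedelta(days=2), t + timedelta(days=2, hours=2)),
    ]
    incidents = [(t + timedelta(days=3), t + timedelta(days=3, minutes=30))]
    print(f"{deployment_frequency([d for _, d in changes], 7):.2f} deploys/day")
    print("lead time:", mean_lead_time(changes))
    print("MTTR:", mttr(incidents))
```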