The Squeeze Is On

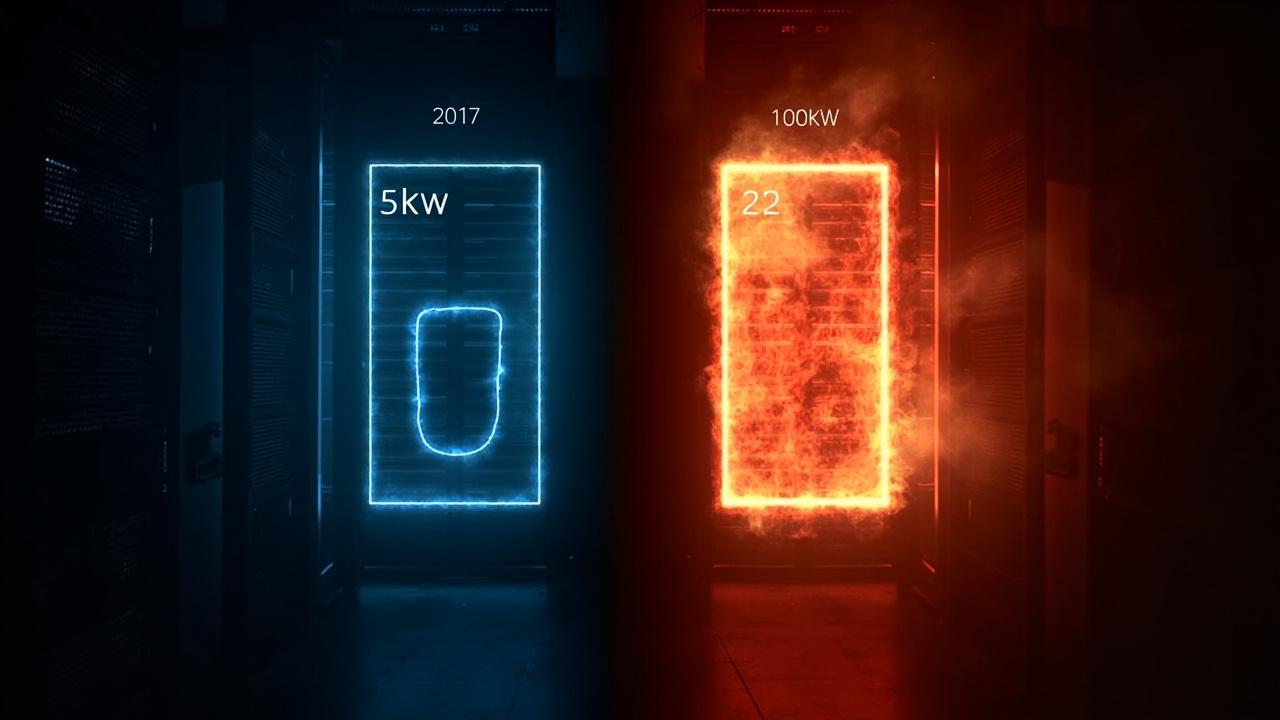

Imagine packing the processing power of an entire turn-of-the-millennium server farm into a modern-day server rack. That’s the condensed reality of today’s IT landscape, where the demand for power density, specifically kilowatts per square foot (kW/sq ft), is skyrocketing. This metric has become a critical key performance indicator (KPI) in data centers and high-performance computing environments.

Kilowatts per square foot (kW/sq ft) represents the amount of electrical power a given area of a data center can support. As computing needs surge, so does the concentration of equipment, leading to a higher kW/sq ft requirement. This surge is not arbitrary; several factors are driving it.
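As a rough illustration of the metric, the sketch below divides a hall's total IT load by its floor area. The rack count, per-rack load, and floor area are hypothetical figures chosen for the example, not values from any particular facility.

```python
def kw_per_sq_ft(total_load_kw: float, floor_area_sq_ft: float) -> float:
    """Return power density in kilowatts per square foot."""
    if floor_area_sq_ft <= 0:
        raise ValueError("floor area must be positive")
    return total_load_kw / floor_area_sq_ft


# Hypothetical hall: 200 racks drawing 8 kW each across 10,000 sq ft.
rack_count = 200
kw_per_rack = 8.0
floor_area = 10_000.0

density = kw_per_sq_ft(rack_count * kw_per_rack, floor_area)
print(f"{density:.2f} kW/sq ft")  # prints "0.16 kW/sq ft"
```

Doubling the per-rack load in the same hall doubles the result, which is why rack-level power draw tends to be the figure that moves this metric.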

These include the increasing prevalence of artificial intelligence (AI), the ever-expanding realm of cloud computing, the rise of edge computing, and continuous hardware shrinkage. Each factor contributes uniquely to the growing need for more efficient and densely packed computing infrastructure.

Optimizing kW/sq ft offers a multitude of benefits. Facilities can achieve heightened efficiency, reduced operational costs, and superior space utilization by increasing their power density. Subsequent sections will delve into each of these factors, illuminating the challenges and opportunities they present for data center professionals, IT managers, real estate developers, technology investors, and anyone interested in the future of computing infrastructure.

The AI Accelerator

The computational demands of artificial intelligence are reshaping the landscape of data centers and high-performance computing environments. AI workloads, particularly those involving machine learning, deep learning, and neural networks, are incredibly resource-intensive. These tasks require massive parallel processing capabilities to train complex models and perform real-time inference. Consequently, there’s a significant increase in the required kilowatts per square foot to accommodate the hardware needed to fulfill these computational needs.

The driving force behind this demand is the specialized hardware required for AI. While CPUs can handle some AI tasks, GPUs (Graphics Processing Units) and ASICs (Application-Specific Integrated Circuits) are far more efficient at accelerating AI algorithms. GPUs, originally designed for rendering graphics, excel at performing the matrix multiplications that are fundamental to deep learning.

ASICs, on the other hand, are custom-designed chips optimized for specific AI tasks, offering even greater performance and energy efficiency. However, these powerful processors consume a significant amount of power in a relatively small space. As AI algorithms become more complex and the datasets they process grow larger, the hardware needed to run them will become more powerful and more power-hungry, pushing power density requirements higher still.

Consider these examples of AI applications and their impact:

Furthermore, the trend of AI chips becoming smaller and more powerful only intensifies the challenges. As manufacturers pack more transistors into smaller areas, the power density increases, leading to higher heat generation. Effective cooling solutions are critical to prevent overheating and ensure the reliable operation of these high-density AI systems. The advancements in AI technology directly correlate with a continued rise in the demand for kilowatts per square foot in data centers and edge computing environments.

Cloud Computing’s Insatiable Appetite

The relentless expansion of cloud services, encompassing Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), has created an unprecedented demand for data centers with enhanced kW/sq ft capabilities. To effectively deliver these services to a global customer base, cloud providers require massive computing infrastructure. This, in turn, drives the need for data centers capable of hosting and operating extremely dense server racks.

The more computing power you can pack into a single square foot, the more cost-effective it becomes to deliver cloud services at scale. This scaling imperative is a fundamental driver of the increasing importance of kilowatts per square foot as a key metric in the cloud computing world.

Hyperscale Challenges

Managing and cooling these dense server racks in hyperscale cloud environments presents significant challenges. Traditional air-cooling methods often struggle to effectively dissipate the heat generated by densely packed servers. This can lead to performance degradation, equipment failure, and increased energy consumption.

Hyperscale data centers must therefore invest in advanced cooling technologies, such as liquid cooling, to maintain optimal operating temperatures and ensure the reliability of their infrastructure. Furthermore, the sheer scale of hyperscale environments necessitates sophisticated management and monitoring systems to ensure efficient resource utilization and minimize downtime.

Virtualization and Sustainability

Virtualization and containerization technologies play a crucial role in maximizing server utilization in cloud environments, which consequently drives up kW/sq ft. By enabling multiple virtual machines or containers to run on a single physical server, these technologies allow cloud providers to pack more computing power into a smaller space. This increased utilization, however, also leads to higher power consumption and heat generation.
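The effect of consolidation on per-server power can be sketched with a simple linear power model. Both the model and the idle and peak wattages below are assumptions made for illustration; real servers follow their own measured power curves.

```python
def server_power_w(utilization: float, idle_w: float = 100.0, peak_w: float = 300.0) -> float:
    """Assumed linear model: idle draw plus a part proportional to load."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_w + (peak_w - idle_w) * utilization


# Hypothetical fleet doing the same total work before and after consolidation.
before_servers, before_util = 10, 0.10  # ten lightly loaded hosts
after_servers, after_util = 2, 0.50     # two hosts running the same workloads

before_total = before_servers * server_power_w(before_util)
after_total = after_servers * server_power_w(after_util)

print(f"per server:  {server_power_w(before_util):.0f} W -> {server_power_w(after_util):.0f} W")
print(f"fleet total: {before_total:.0f} W -> {after_total:.0f} W")
```

Under these assumed numbers, each remaining server draws more power while the fleet total falls. In practice the freed rack space is often refilled with more heavily utilized servers, which is how consolidation ends up raising kW/sq ft.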

In addition, cloud providers are increasingly focused on sustainability goals, which require them to design and operate their data centers in an environmentally responsible manner.

This includes adopting energy-efficient cooling solutions, utilizing renewable energy sources, and minimizing waste. The need to balance high power density with sustainability considerations is a major challenge for cloud providers.

Edge Computing’s Distributed Power

Edge computing represents a significant shift in how data is processed and delivered, moving computational resources closer to the end-user. This proximity drastically reduces latency, leading to faster response times and improved performance for a variety of applications. Think of self-driving cars needing real-time analysis of sensor data, or augmented reality applications that overlay digital information onto the physical world.

These applications demand immediate processing that centralized cloud computing, with the latency of a round trip to a distant data center, often cannot provide. Edge computing addresses this by distributing smaller data centers and compute resources across a wider geographical area, enabling localized data processing and reduced network congestion.

The Challenges of Distributed Density

Deploying high-density computing at the edge presents unique challenges. Unlike traditional data centers, edge locations often have limited space, power, and cooling infrastructure. Imagine trying to fit a powerful server rack into a cell tower base station or a retail store’s back room.

Space is often at a premium, and the existing power grid may not be able to support the high power density required by modern computing hardware. Furthermore, these locations may lack the sophisticated cooling systems found in larger data centers, making it difficult to dissipate the heat generated by dense server deployments. This necessitates innovative solutions that prioritize efficiency and minimize environmental impact.

Diverse Edge Environments and Their Needs

The kW/sq ft requirements for edge computing vary widely depending on the specific environment and application. A micro data center located in a remote industrial facility, for example, might require a higher kW/sq ft than a server installed in a retail location for processing local transactions. Similarly, cell towers supporting 5G networks need to handle increasing data throughput, demanding more powerful and energy-efficient hardware.

These variations emphasize the need for tailored solutions that meet the specific needs of each edge deployment. This often means considering factors like environmental conditions, available space, power constraints, and the type of applications being supported. The ability to adapt and optimize infrastructure for these diverse scenarios is crucial for successful edge deployments.

Hardware Shrinkage

For decades, Moore’s Law has been a guiding principle in the semiconductor industry, predicting that the number of transistors on a microchip doubles approximately every two years. This relentless pursuit of miniaturization has led to remarkable advancements in computing power and efficiency. As transistors shrink, more of them can be packed into the same physical space, resulting in significantly increased transistor density.
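The doubling rule can be written as a one-line projection. The starting transistor count below is a hypothetical round number, and the two-year doubling period is the approximation stated above rather than a guarantee for any real product line.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count assuming a fixed doubling period."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical chip starting at one billion transistors.
start = 1e9
for years in (0, 2, 4, 10):
    growth = projected_transistors(start, years) / start
    print(f"after {years:>2} years: {growth:.0f}x the starting count")
```

Ten years at this pace means five doublings, or 32 times as many transistors in a comparable footprint.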

This allows for more complex and powerful processors, memory chips, and other integrated circuits. However, this increased density comes with a trade-off: increased heat generation within smaller areas. Managing this heat effectively becomes a critical challenge in high-density computing environments.

The implications of hardware shrinkage extend beyond just the processor itself. Memory chips, GPUs, and even networking components are all subject to the same drive for miniaturization. This trend is pushing the limits of traditional cooling methods and necessitating the development of innovative thermal management solutions. Consider the following factors that contribute to the challenge:

- Increased Transistor Density: More transistors in a smaller area generate more heat.

- Higher Clock Speeds: Faster processing speeds lead to increased power consumption and heat generation.

- Complex Architectures: Modern chips feature increasingly complex architectures with multiple cores and specialized processing units, all contributing to heat output.

Beyond Moore’s Law, new materials and manufacturing techniques are emerging that enable even higher density. 3D stacking, for example, allows manufacturers to vertically stack multiple layers of chips, further increasing transistor density in a given footprint. This approach offers significant performance gains but also presents new challenges in terms of heat dissipation.

The ability to effectively manage the heat generated by these densely packed components is paramount to ensuring the reliability and performance of modern computing systems. The rise in power density requires innovative thermal solutions.

Cooling the Beast

As data centers pack more computing power into smaller spaces, the challenge of managing heat becomes paramount. Traditional air cooling methods, while still viable in some scenarios, often struggle to keep pace with the thermal output of high-density racks. This has spurred innovation in cooling technologies, pushing the boundaries of what’s possible in thermal management. The future of efficient, high-density data centers hinges on effectively addressing this cooling challenge.

Liquid cooling solutions are gaining significant traction as a means to directly tackle the heat at its source. Direct-to-chip cooling involves attaching cold plates directly to heat-generating components like CPUs and GPUs, circulating liquid to draw heat away.

This method offers superior heat transfer compared to air cooling, enabling higher power densities and improved overall efficiency. Immersion cooling takes this concept a step further by submerging entire servers in a dielectric fluid, providing even more effective heat dissipation.

The fluid absorbs the heat generated by the components and is then circulated through a heat exchanger to release the heat. This approach can significantly reduce energy consumption associated with cooling, leading to substantial cost savings and a reduced carbon footprint. The effectiveness of these methods directly correlates with the rack’s power density.
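One way to see why liquid outperforms air is the steady-state heat balance Q = ρ · c_p · V̇ · ΔT, which gives the volume of coolant that must flow to carry away a given heat load. The sketch below applies it to a hypothetical 30 kW rack. The fluid properties are approximate room-temperature values for air and water, used here as assumptions; a dielectric immersion fluid would have different properties.

```python
def volumetric_flow_m3_s(heat_w: float, density_kg_m3: float,
                         specific_heat_j_kg_k: float, delta_t_k: float) -> float:
    """Coolant volume flow needed to absorb heat_w with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (density_kg_m3 * specific_heat_j_kg_k * delta_t_k)


# Approximate properties near room temperature: (density kg/m^3, specific heat J/(kg*K)).
AIR = (1.2, 1005.0)
WATER = (997.0, 4186.0)

rack_heat_w = 30_000.0  # hypothetical 30 kW rack
delta_t_k = 10.0        # assumed coolant temperature rise

air_flow = volumetric_flow_m3_s(rack_heat_w, *AIR, delta_t_k)
water_flow = volumetric_flow_m3_s(rack_heat_w, *WATER, delta_t_k)

print(f"air:   {air_flow:.2f} m^3/s")
print(f"water: {water_flow * 1000:.2f} L/s")
print(f"air needs about {air_flow / water_flow:.0f}x the volume flow of water")
```

With these assumed properties, water moves the same heat with a few thousand times less volume flow than air, which is the physical basis for the density gains attributed to liquid cooling.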

Rear-door heat exchangers (RDHx) offer a less intrusive approach to enhancing cooling capacity. These units are mounted on the back of server racks and use a liquid-to-air heat exchanger to remove heat from the exhaust air. RDHxs can be retrofitted into existing data centers, making them a practical option for upgrading cooling infrastructure without requiring extensive modifications.

Furthermore, advancements in coolant types, such as nanofluids with enhanced thermal conductivity, are continually improving the performance of liquid cooling systems. By precisely targeting the heat source and employing efficient heat transfer mechanisms, these innovative cooling solutions are paving the way for sustainable high-density computing.

| Cooling Technology | Description | Benefits |
|---|---|---|
| Direct-to-Chip Liquid Cooling | Cold plates attached to CPUs and GPUs, circulating liquid to remove heat. | Superior heat transfer, enables higher power densities, improved efficiency. |
| Immersion Cooling | Servers submerged in dielectric fluid for maximum heat dissipation. | Significant energy reduction, lower carbon footprint, very high density support. |
| Rear-Door Heat Exchangers | Liquid-to-air heat exchanger on the back of server racks to remove exhaust heat. | Retrofit option, relatively easy to implement, enhances existing cooling. |

Real Estate Implications

Data center design and real estate selection are now inextricably linked to kilowatt per square foot requirements. The higher the kW/sq ft demand, the more crucial it becomes to carefully evaluate power availability, cooling infrastructure, and physical space limitations. These factors directly impact the overall cost and viability of high-density deployments.

Real estate developers and data center operators must collaborate to find locations with sufficient power capacity and the potential for efficient cooling solutions. Retrofitting existing buildings for data center use presents unique challenges, often requiring significant infrastructure upgrades to meet the demands of modern, high-density computing.

The financial implications are considerable. Securing sufficient power and implementing advanced cooling systems can represent a substantial portion of the total project cost. Failing to adequately address these factors can lead to operational inefficiencies, increased energy consumption, and ultimately, higher operating expenses.

Therefore, a thorough assessment of real estate options, considering their ability to support high power density, is paramount. This assessment should include evaluating the local power grid’s capacity, the availability of renewable energy sources, and the feasibility of implementing innovative cooling technologies. The success of a data center hinges on the ability to effectively manage and dissipate the heat generated by high-density equipment.
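A first-pass screen of candidate sites might compare the power a target density implies against what the local grid connection can deliver. The sketch below is one hypothetical way to do that. The `Site` fields, the site figures, and the `overhead_factor` (total facility power divided by IT power, covering cooling and distribution losses) are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    floor_area_sq_ft: float
    grid_capacity_kw: float


def required_utility_kw(site: Site, target_kw_per_sq_ft: float,
                        overhead_factor: float) -> float:
    """IT load implied by the target density, scaled up for facility overhead."""
    it_load_kw = site.floor_area_sq_ft * target_kw_per_sq_ft
    return it_load_kw * overhead_factor


def headroom_kw(site: Site, target_kw_per_sq_ft: float, overhead_factor: float) -> float:
    """Positive when the grid connection can carry the planned load."""
    return site.grid_capacity_kw - required_utility_kw(
        site, target_kw_per_sq_ft, overhead_factor)


# Hypothetical candidates with identical floor space but different grid capacity.
candidates = [
    Site("Site A", floor_area_sq_ft=20_000, grid_capacity_kw=10_000),
    Site("Site B", floor_area_sq_ft=20_000, grid_capacity_kw=6_000),
]

for site in candidates:
    margin = headroom_kw(site, target_kw_per_sq_ft=0.3, overhead_factor=1.4)
    verdict = "feasible" if margin >= 0 else "needs a grid upgrade"
    print(f"{site.name}: headroom {margin:,.0f} kW ({verdict})")
```

A screen like this only narrows the list. Renewable availability and cooling feasibility still need their own evaluation.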

Innovative data center designs are emerging to optimize space utilization and cooling efficiency. Modular data centers, for instance, offer a flexible and scalable approach to high-density deployments. These prefabricated units can be quickly deployed and configured to meet specific power and cooling requirements. Furthermore, designs that incorporate vertical stacking and optimized airflow can maximize the use of available space.

The increasing emphasis on sustainability is also driving innovation in data center design. Techniques such as waste heat reuse and natural cooling methods are becoming increasingly important for reducing the environmental impact of high-density computing. Efficiently managing power density and heat output is no longer just an operational consideration but a critical factor in long-term sustainability.

| Factor | Impact on Real Estate |
|---|---|
| Power Availability | Dictates location feasibility and infrastructure costs. |
| Cooling Infrastructure | Influences design choices and ongoing operational expenses. |
| Physical Space Constraints | Drives innovative designs for space optimization. |

Looking Ahead

The relentless march toward higher kilowatts per square foot is not merely a technical challenge; it’s a signal of a fundamental shift in how we conceive, construct, and operate computing infrastructure. As we look to the future, the trends outlined here (the insatiable demands of AI and cloud computing, the distributed nature of edge deployments, and the ever-shrinking size of hardware) will only intensify.

The data center industry, real estate developers, and technology innovators must collaborate to develop solutions that are not only efficient and cost-effective, but also environmentally responsible.

The rise of quantum computing and neuromorphic architectures, while still in their nascent stages, promises to further redefine the boundaries of power density. These technologies, with their unique processing paradigms, could potentially pack even more computational horsepower into smaller spaces, demanding entirely new approaches to cooling and power management. Moreover, as sustainability becomes an increasingly critical concern, the industry must prioritize energy efficiency and explore renewable energy sources to mitigate the environmental impact of high-density computing.

In conclusion, the kilowatt per square foot metric encapsulates the essence of modern computing’s trajectory: more power, more performance, in less space. Ignoring this trend is akin to ignoring the tide. Embracing innovation in cooling, power distribution, and facility design is not just about staying competitive; it’s about building a sustainable and scalable foundation for the future of technology. The industry must proactively explore and implement high-density solutions to unlock the full potential of future technologies.

Frequently Asked Questions

What is power density and how is it calculated?

Power density refers to the amount of power that is concentrated within a given volume or area. It essentially quantifies how much power is packed into a particular space. To calculate power density, you typically divide the power by the volume or area, depending on whether you’re dealing with a three-dimensional or two-dimensional object.

Why is power density an important factor in electronics and engineering design?

Power density is a crucial consideration in electronics and engineering because it directly impacts the size, performance, and thermal management of devices. High power density can lead to smaller, more efficient devices, but it also presents significant challenges in terms of heat dissipation and component reliability. Engineers must carefully manage power density to ensure optimal performance and longevity.

What are the units of measurement for power density?

The units of measurement for power density depend on whether you are considering power per unit area or power per unit volume. Power per unit area is typically expressed in watts per square meter (W/m²) or watts per square centimeter (W/cm²).

For power per unit volume, the unit is usually watts per cubic meter (W/m³) or watts per cubic centimeter (W/cm³).
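Data center figures quoted in kW/sq ft can be converted to the SI area unit with a fixed factor, since one square foot is exactly 0.09290304 square meters. The helper names below are illustrative only.

```python
SQ_FT_IN_M2 = 0.09290304  # exact: (0.3048 m) squared


def kw_per_sq_ft_to_w_per_m2(kw_per_sq_ft: float) -> float:
    """Convert kilowatts per square foot to watts per square meter."""
    return kw_per_sq_ft * 1000.0 / SQ_FT_IN_M2


def w_per_m2_to_kw_per_sq_ft(w_per_m2: float) -> float:
    """Convert watts per square meter to kilowatts per square foot."""
    return w_per_m2 * SQ_FT_IN_M2 / 1000.0


# Example values are arbitrary sample densities.
for density in (0.1, 0.5, 1.0):
    print(f"{density} kW/sq ft = {kw_per_sq_ft_to_w_per_m2(density):,.0f} W/m^2")
```

One kW/sq ft works out to a little under 10,800 W/m², so the two scales differ by roughly four orders of magnitude when comparing kW/sq ft against W/m².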

How does power density affect the heat generation and dissipation in electronic components?

Power density directly correlates with heat generation in electronic components. A higher power density means more power is being dissipated as heat within a smaller area or volume.

This increased heat generation necessitates effective heat dissipation methods, such as heat sinks, fans, or liquid cooling, to prevent components from overheating and failing. Proper thermal management is critical to maintaining performance and reliability.

What are some common methods for increasing power density in electronic devices?

Several methods exist for increasing power density in electronic devices. These include using advanced materials with better thermal conductivity, optimizing component layout to minimize heat concentration, and employing innovative cooling techniques like microchannel heat exchangers or vapor chambers.

Furthermore, advancements in semiconductor technology, such as wide bandgap materials like GaN and SiC, enable devices to operate at higher power levels within the same physical footprint.