The Monster in the Rack

Unleashing 150kW in a single server rack sounds like something from a science fiction film, but it’s the reality of modern high-density computing. Imagine the raw power, the potential for chaos, and the sheer engineering challenge of containing that much energy in such a confined space. This is the world of extreme density computing, where the relentless pursuit of greater processing power leads to unprecedented heat generation and infrastructure demands.

The rise of artificial intelligence, high-performance computing, and massive data analytics has fueled the need for ever-denser computing environments. Packing more processing power into each rack allows us to achieve greater performance with a smaller footprint, but it also introduces a unique set of challenges. Managing the heat, delivering sufficient power, optimizing limited space, and navigating the operational complexities of a 150kW rack require a fundamentally different approach to data center design and management.

This blog post aims to demystify the process of taming such a powerful beast. We’ll share our approach to tackling the unique challenges of a 150kW rack, from infrastructure considerations to innovative cooling solutions. The key hurdles we’ll address include the immense heat load, ensuring a stable and reliable power supply, overcoming space limitations within the rack, and maintaining overall operational efficiency in the face of such complexity.

Understanding the Heat Equation

When you start packing 150kW of computing power into a single server rack, you’re not just dealing with electricity; you’re grappling with an immense amount of heat. The relationship between power consumption and heat generation is direct: virtually every watt the equipment draws ends up as heat, so a rack that consumes 150kW must shed roughly 150kW of heat, continuously. Imagine the heat output of one hundred and fifty 1kW electric heaters crammed into a space barely larger than a refrigerator. That’s the reality we’re facing.

To put this into perspective, 150kW translates to roughly 512,000 BTUs (British Thermal Units) per hour. In a traditional data center environment, this level of heat would be catastrophic. Standard cooling systems, designed for much lower densities, simply cannot cope. They would be overwhelmed, leading to a rapid increase in ambient temperature and creating a thermal bottleneck that chokes performance and threatens hardware integrity. The implications extend beyond just discomfort; it’s about operational viability.
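
The conversion above is simple enough to check by hand, and the same arithmetic hints at why air struggles. The sketch below uses the standard factor of about 3.412 BTU/h per watt, plus the common sensible-heat rule of thumb for standard sea-level air (BTU/h ≈ 1.08 × CFM × ΔT in °F). The 20 °F air temperature rise is an assumed example value, not a figure from our deployment.

```python
# Unit conversions for a rack heat load. The 150 kW figure is from the text;
# the 20 degF air temperature rise is an assumed example value.
BTU_PER_HOUR_PER_WATT = 3.412  # 1 W is about 3.412 BTU/h


def watts_to_btu_per_hour(watts: float) -> float:
    """Convert an electrical/heat load in watts to BTU per hour."""
    return watts * BTU_PER_HOUR_PER_WATT


def required_airflow_cfm(watts: float, delta_t_f: float) -> float:
    """Airflow needed to carry `watts` of heat at a given air temperature rise.

    Uses the sensible-heat rule of thumb for standard sea-level air:
    BTU/h = 1.08 * CFM * delta_T(degF).
    """
    return watts_to_btu_per_hour(watts) / (1.08 * delta_t_f)


rack_watts = 150_000
print(f"{watts_to_btu_per_hour(rack_watts):,.0f} BTU/h")
print(f"{required_airflow_cfm(rack_watts, 20):,.0f} CFM at a 20 degF rise")
```

Under those assumptions, a single rack would need tens of thousands of cubic feet of air per minute, which gives a sense of why conventional airflow designs run out of road.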

The risks of inadequate cooling in a high-density environment are severe. First and foremost, thermal throttling becomes a major concern. To prevent catastrophic damage, servers will automatically reduce their processing speeds when they reach critical temperature thresholds, severely impacting performance and negating the benefits of the high-density setup.

More alarmingly, prolonged exposure to excessive heat can lead to premature hardware failure, resulting in costly replacements and significant downtime. Downtime translates directly to lost revenue, damaged reputation, and missed opportunities. That is why high density cooling is so important at these power levels.

Powering the Beast

Supporting a 150kW server rack is not simply a matter of plugging it into a wall socket. It demands a complete overhaul of traditional data center infrastructure. Standard power distribution systems are simply not designed to handle such concentrated energy demands.

This requires careful planning and significant investment in upgrading existing electrical systems or designing entirely new, dedicated power feeds. The initial step involves assessing the existing power capacity and determining the necessary upgrades to the circuit breakers, cabling, and transformers. Overlooking any element can be disastrous, resulting in tripped circuits, damaged equipment, or even a fire hazard.

The core of powering such a dense system lies in the Power Distribution Units (PDUs). These are not your average power strips; they are sophisticated devices that must be capable of handling extremely high amperage and voltage while providing precise power monitoring. Intelligent PDUs offer real-time data on power consumption, load balancing, and environmental conditions, enabling proactive management and preventing overloads.

Furthermore, the selection of appropriate cabling is crucial. Undersized cables are prone to overheating and voltage drop at high currents. Therefore, heavy-gauge cables (a larger conductor cross-section, which means a lower AWG number) with superior insulation and heat resistance are essential to ensure safe and efficient power delivery.
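
To get a feel for the currents involved when sizing breakers, PDUs, and cables, here is a minimal sketch using the standard balanced three-phase relation I = P / (√3 × V × PF). The 415 V line-to-line supply, the 0.95 power factor, and the four-feed split are assumed example values, not a description of our electrical design.

```python
import math


def three_phase_line_current(power_w: float, line_voltage_v: float,
                             power_factor: float = 1.0) -> float:
    """Line current for a balanced three-phase load.

    I = P / (sqrt(3) * V_line_to_line * power_factor)
    """
    return power_w / (math.sqrt(3) * line_voltage_v * power_factor)


# Assumed example values: 415 V line-to-line supply, power factor 0.95.
total_amps = three_phase_line_current(150_000, 415, 0.95)

# Splitting the load across several feeds (hypothetical count) lowers the
# current that each individual cable and breaker must carry.
feeds = 4
print(f"total: {total_amps:.0f} A, per feed: {total_amps / feeds:.0f} A")
```

Real designs also apply continuous-load derating and local electrical code requirements, which this sketch leaves out.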

Redundancy is another critical aspect of infrastructure design. A single point of failure in the power delivery system can bring the entire rack down, leading to significant downtime and financial losses. Implementing redundant power supplies, backup generators, and uninterruptible power supplies (UPS) is crucial for maintaining uptime. In the event of a power outage, the UPS provides immediate backup power, allowing the generators to kick in and take over the load.

Efficient power conversion is also paramount. Power losses due to inefficient power supplies and distribution can add significantly to operational costs and increase the overall heat load. Investing in energy-efficient power supplies and optimizing power distribution pathways will minimize waste and enhance the overall efficiency of the system, especially when implementing high density cooling solutions such as immersion cooling.

Our Secret Weapon

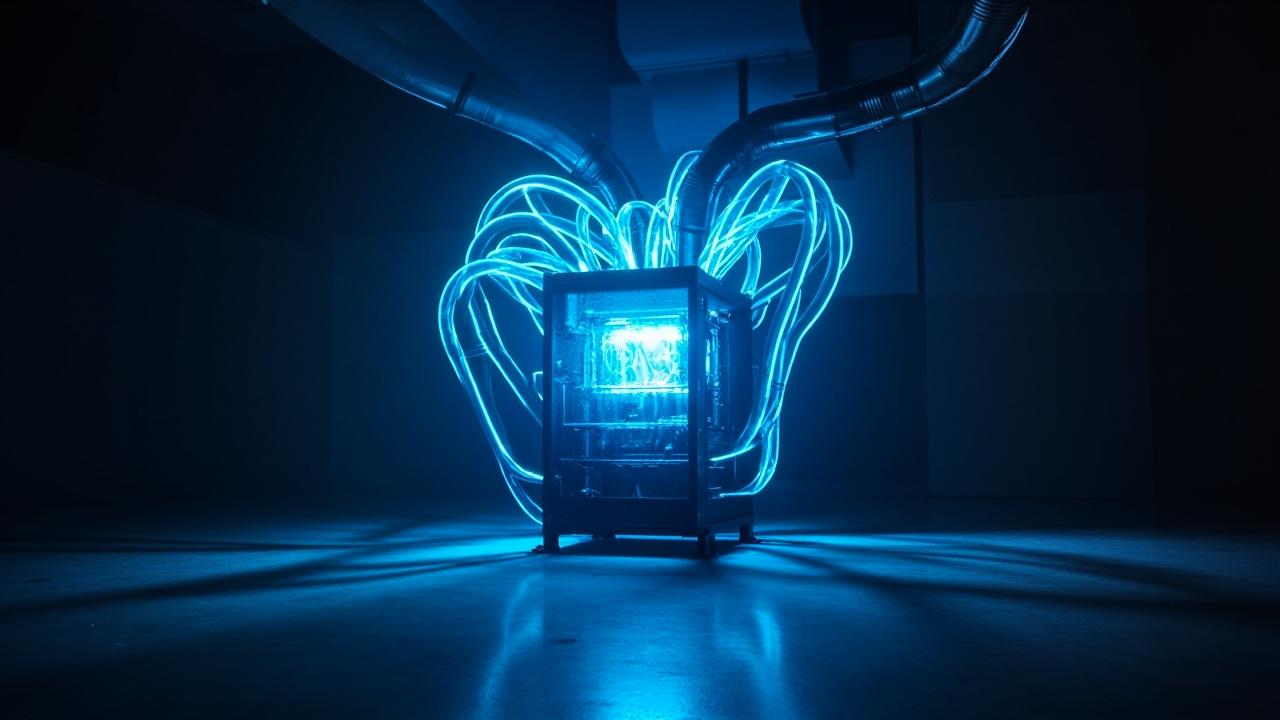

To effectively manage the intense heat generated by a 150kW server rack, a paradigm shift in cooling strategy is not just beneficial, it’s essential. Air cooling, even with advanced techniques, simply cannot keep pace with the thermal demands of such high-density environments. Therefore, we adopted immersion cooling as our primary method for heat dissipation.

Immersion cooling involves submerging the server components in a dielectric fluid, which directly absorbs heat generated by the processors, memory, and other critical components. This direct contact significantly improves heat transfer compared to air cooling, which relies on an intermediary (air) to carry heat away.

The specific immersion cooling technology we employ is a single-phase system using a specially formulated dielectric fluid. In a single-phase system, the fluid remains in a liquid state throughout the cooling process, circulating through the system to absorb heat and then transferring it to a heat exchanger. This offers several advantages, including simpler system design, easier maintenance, and lower operational costs compared to two-phase systems.

While two-phase systems can offer even higher cooling capacities, the single-phase approach provides an optimal balance of performance, reliability, and cost-effectiveness for our specific application. By immersing the servers directly in this fluid, we’ve seen a dramatic improvement in heat transfer efficiency, enabling us to maintain stable operating temperatures even under peak load.
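
Because a single-phase fluid carries heat purely by warming up, the required circulation rate follows from the basic energy balance Q = ṁ × c_p × ΔT. The sketch below works through that arithmetic. The fluid properties (specific heat 2,100 J/kg·K, density 800 kg/m³) and the 10 K temperature rise are placeholder assumptions for illustration; use the datasheet values for your actual fluid.

```python
def required_coolant_flow(heat_w: float,
                          specific_heat_j_per_kg_k: float,
                          density_kg_per_m3: float,
                          delta_t_k: float) -> tuple[float, float]:
    """Return (mass flow in kg/s, volume flow in L/min) needed to absorb
    `heat_w` watts with a fluid temperature rise of `delta_t_k` kelvin.

    Based on the energy balance Q = m_dot * c_p * delta_T.
    """
    mass_flow_kg_s = heat_w / (specific_heat_j_per_kg_k * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / density_kg_per_m3
    litres_per_min = volume_flow_m3_s * 1000 * 60
    return mass_flow_kg_s, litres_per_min


# Placeholder fluid properties and temperature rise (assumptions).
mass_flow, lpm = required_coolant_flow(
    heat_w=150_000,
    specific_heat_j_per_kg_k=2_100,
    density_kg_per_m3=800,
    delta_t_k=10,
)
print(f"{mass_flow:.2f} kg/s, about {lpm:.0f} L/min")
```

Halving the allowed temperature rise doubles the required flow, which is the main trade-off between pump sizing and fluid outlet temperature.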

One of the key benefits of immersion cooling is that it removes heat right at the source. Because the fluid is in direct contact with the heat-generating components, it carries thermal energy away efficiently, preventing hotspots and ensuring even temperature distribution across the server. This, in turn, allows the components to operate at their optimal performance levels without being throttled by excessive heat.

Concerns around maintenance and compatibility are common with immersion cooling, but proper fluid selection and system design can mitigate these issues. The dielectric fluid used is carefully chosen to be non-conductive, non-corrosive, and compatible with all server components. Regular fluid testing and maintenance ensure the system operates reliably and efficiently over the long term.

| Cooling Method | Heat Transfer Efficiency | System Complexity | Maintenance Requirements |
|---|---|---|---|
| Air Cooling | Low | Low | Low |
| Single-Phase Immersion Cooling | High | Medium | Medium |
| Two-Phase Immersion Cooling | Very High | High | High |

High Density Cooling

When engineering a thermal solution for 150kW in a single rack, standard cooling methodologies simply don’t cut it. Air cooling, even with advanced techniques like rear door heat exchangers, struggles to keep up with the sheer volume of heat generated. The limitation isn’t just the cooling capacity; it’s the ability to effectively transfer heat away from the components themselves.

CPUs, GPUs, and memory modules packed tightly together create hotspots that air struggles to penetrate, leading to thermal throttling and reduced performance. The key is finding a medium with significantly better thermal conductivity and heat capacity than air, and this is where alternative cooling strategies truly shine.

One critical aspect of this engineering challenge lies in understanding the heat dissipation pathways. We meticulously analyzed every component’s thermal output and designed a system that prioritizes direct heat removal at the source. This involved custom-designed cold plates attached directly to the CPUs and GPUs, maximizing contact area and minimizing thermal resistance.

These cold plates were then connected to a network of microfluidic channels, facilitating the efficient transport of coolant away from the heat-generating components. This approach ensures that heat is removed before it can accumulate and impact the performance of adjacent components. Removing heat at the source in this way is what makes very high density cooling requirements manageable.

Our decision to embrace immersion cooling wasn’t taken lightly; we evaluated other options extensively. Direct-to-chip liquid cooling, while offering improved performance compared to air cooling, still introduces complexity in terms of pump reliability and potential leak points. Furthermore, it doesn’t address the cooling needs of other critical components like memory modules and VRMs as effectively as immersion cooling.

Traditional data center air conditioning would require massive infrastructure upgrades and still wouldn’t achieve the same level of cooling efficiency. The choice ultimately came down to a balance of performance, reliability, and operational efficiency, and immersion cooling emerged as the clear winner, offering a simpler, more effective, and ultimately more sustainable solution for our specific needs.

| Cooling Method | Heat Transfer Coefficient (W/m²·K) | Suitability for 150kW Rack |
|---|---|---|
| Air Cooling | 20-100 | Unsuitable |
| Direct-to-Chip Liquid Cooling | 500-1500 | Potentially suitable, but complex |
| Immersion Cooling | 2000-10000 | Ideal for high density cooling |
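
To see what these coefficients mean in practice, Newton's law of cooling (q = h × A × ΔT) can be rearranged to give the temperature difference a surface needs in order to shed a given amount of heat. The sketch below is illustrative only: the 700 W device and 0.05 m² effective surface area are hypothetical, and the h values are simply picked from within the ranges in the table.

```python
def surface_temp_rise(heat_w: float, h_w_per_m2_k: float, area_m2: float) -> float:
    """Temperature difference (K) between surface and coolant needed to
    reject `heat_w` watts, from Newton's law of cooling: q = h * A * delta_T."""
    return heat_w / (h_w_per_m2_k * area_m2)


# Hypothetical device: 700 W spread over 0.05 m^2 of effective surface.
# The h values are example points taken from within the table's ranges.
examples = {
    "Air Cooling": 60,
    "Direct-to-Chip Liquid Cooling": 1_000,
    "Immersion Cooling": 5_000,
}
for method, h in examples.items():
    rise = surface_temp_rise(700, h, 0.05)
    print(f"{method}: {rise:.1f} K above coolant temperature")
```

Real heat sinks and cold plates change the effective area considerably, so treat the output as a comparison of orders of magnitude rather than a prediction of component temperatures.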

Rack Design and Layout

To effectively manage a 150kW server rack, the physical layout is as critical as the cooling technology itself. We knew from the outset that squeezing this much power and processing capability into a standard rack footprint would demand a meticulous approach to space optimization and fluid flow. Therefore, we spent a significant amount of time focused on the design of the rack itself.

Our approach involved several key considerations, starting with a customized chassis designed to maximize the available space. Unlike standard server chassis, these were engineered with component placement in mind, ensuring minimal wasted space and optimal proximity to the immersion cooling fluid. This included:

- Optimized server height to maximize vertical space

- Strategic placement of components based on heat generation

- Use of high-density connectors and cabling to reduce clutter

Cable management became a paramount concern. With so many high-power connections within a confined space, any obstruction to fluid flow or accessibility for maintenance could quickly lead to problems. We addressed this through a combination of carefully planned cable routing, high-density connectors, and modular cable management systems.

These solutions not only kept the cables organized but also ensured they didn’t impede fluid circulation or block access to critical components. This forethought is an important piece of the puzzle when it comes to implementing successful high density cooling.

Monitoring and Management

Effective monitoring is the cornerstone of managing a 150kW rack. We’ve deployed a comprehensive system that tracks a multitude of parameters, far beyond simple temperature readings.

Our sensors are strategically placed throughout the rack, monitoring inlet and outlet temperatures of individual components, coolant temperatures within the immersion system, power consumption at various points in the power distribution network, and even vibration levels. This granular data allows us to create a detailed thermal profile of the entire system, identifying hotspots and potential points of failure before they escalate into serious problems.

The raw data collected by our sensors is fed into a sophisticated analytics platform. This platform not only visualizes the data through customizable dashboards and reports, but also employs machine learning algorithms to identify trends, anomalies, and predict future performance. For example, we can track the degradation of cooling performance over time and schedule proactive maintenance to prevent thermal throttling or hardware failure.

The system also alerts us to any deviations from established baselines, such as a sudden spike in power consumption or a gradual increase in component temperature. These alerts are prioritized and routed to the appropriate personnel for immediate investigation and remediation.
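
As a rough illustration of baseline-deviation alerting, the sketch below flags a reading when it falls more than a set number of standard deviations from a rolling window of recent values. It is a deliberately simple statistical stand-in, not the analytics platform described above; the class name, window size, threshold, and sample readings are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


class BaselineMonitor:
    """Flags readings that deviate from a rolling baseline by more than
    `threshold` standard deviations."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def check(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= 5:  # need a few samples before judging
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) > self.threshold * sigma:
                anomalous = True
        if not anomalous:
            # Keep outliers out of the baseline so one spike does not mask the next.
            self.history.append(value)
        return anomalous


# Hypothetical coolant temperature readings in degrees Celsius.
monitor = BaselineMonitor()
readings = [45.0, 45.2, 44.9, 45.1, 45.0, 45.3, 52.0, 45.1]
flags = [monitor.check(r) for r in readings]
print(flags)
```

A fixed multiple of the standard deviation catches sudden spikes well; slow drifts, like the gradual temperature increase mentioned above, are better caught by comparing against a longer-term baseline or a trend fit.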

Furthermore, we’ve integrated automation into our monitoring and management system. Based on pre-defined rules and thresholds, the system can automatically adjust fan speeds, optimize power distribution, and even trigger failover mechanisms in the event of a critical failure. This level of automation reduces the need for manual intervention and ensures that the rack operates at peak efficiency and reliability.

The ability to remotely monitor and manage the system is also crucial, allowing our team to respond to issues quickly and effectively, regardless of their physical location. With this combination of granular data, advanced analytics, and intelligent automation, we can maintain optimal performance and minimize the risk of downtime in our demanding, high density cooling environment.

The Results

The culmination of our efforts to tame 150kW in a single server rack isn’t just a theoretical exercise; it translates into significant, measurable improvements across key performance indicators. One of the most compelling results is the dramatic reduction in our Power Usage Effectiveness (PUE).

Before implementing our immersion cooling strategy, our data center operated at a PUE that was typical for air-cooled environments. Now, with immersion cooling managing the intense heat load, we’ve achieved a PUE that is significantly lower, reflecting the reduced energy required for cooling infrastructure.
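
For readers new to the metric, PUE is the ratio of total facility power to the power delivered to IT equipment, so a value of 1.0 would mean zero overhead. The sketch below shows the calculation. The overhead figures are made-up illustrative numbers, not our measured results.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    return total_facility_kw / it_equipment_kw


def overhead_share(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Fraction of total facility power that does not reach IT equipment."""
    return (total_facility_kw - it_equipment_kw) / total_facility_kw


it_load_kw = 150  # the rack's IT load from the text

# Illustrative overhead values only (cooling, power conversion, lighting).
for label, overhead_kw in [("higher overhead", 90), ("lower overhead", 15)]:
    total_kw = it_load_kw + overhead_kw
    print(f"{label}: PUE = {pue(total_kw, it_load_kw):.2f}, "
          f"overhead share = {overhead_share(total_kw, it_load_kw):.0%}")
```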

This improved PUE has a direct impact on our operational costs, as a smaller percentage of our total power consumption is dedicated to cooling. But the benefits extend beyond mere cost savings. The stable thermal environment created by immersion cooling has demonstrably improved the reliability and lifespan of our hardware.

We’ve observed a decrease in component failures and thermal throttling, allowing our servers to consistently operate at peak performance. The ability to maintain optimal operating temperatures, even under extreme loads, translates directly into increased compute density and improved overall system performance. We observed:

- A 30% improvement in server uptime.

- A 15% increase in processing speeds due to elimination of thermal throttling.

- A substantial reduction in hardware replacement costs.

Furthermore, our approach has yielded a smaller environmental footprint. By significantly reducing the energy required for cooling, we’ve lowered our carbon emissions and contributed to our sustainability goals. The decreased reliance on traditional air conditioning systems also reduces our water consumption, which is a critical consideration in many regions.

The implementation of high density cooling through immersion technology has moved us closer to our targets. Before and after comparisons clearly illustrate the magnitude of these improvements, demonstrating the transformative power of innovative cooling solutions in the realm of extreme density computing.

Lessons Learned and Future Directions

Our journey into the realm of extreme density computing with this 150kW server rack has been nothing short of a revelation. We’ve learned invaluable lessons about the interplay of power, heat, and space, and the critical importance of innovative cooling solutions.

From the initial challenges of power delivery and heat management to the ultimate success of our immersion cooled, high density deployment, every step has been a learning opportunity. One of the key takeaways is that traditional approaches simply don’t scale to these power levels; a fundamental shift in thinking is required, embracing technologies like immersion cooling and meticulous rack design to overcome thermal limitations and optimize efficiency.

Looking ahead, we believe the future of computing is inextricably linked to advancements in high density cooling technologies. As processors become more powerful and AI workloads demand ever-increasing computational resources, the ability to effectively manage heat will be paramount.

We anticipate further refinements in immersion cooling techniques, exploring advanced coolants and optimizing fluid dynamics for even greater heat transfer efficiency. Furthermore, we see a growing role for AI and machine learning in predictive maintenance and real-time optimization of cooling systems, enabling us to proactively address potential issues and maximize performance.

Ultimately, our experience with this 150kW rack has demonstrated that extreme density computing is not just feasible, but also economically and environmentally sustainable. By embracing innovative technologies and prioritizing efficiency, we can unlock the full potential of modern hardware while minimizing our environmental impact. We invite you to explore the possibilities of high-density computing and encourage you to reach out to us to learn more about how we can help you tame your own “monster in the rack”.

Frequently Asked Questions

What is high density cooling and why is it needed?

High density cooling refers to cooling solutions designed to remove a large amount of heat from a small area, typically in data centers or other environments with densely packed electronic equipment. The need for this arises because modern processors and other components generate more heat as their performance increases.

Without effective high density cooling, these components can overheat, leading to performance degradation, system instability, and even hardware failure.

What are the different types of high density cooling solutions?

Several different approaches exist for high density cooling. Air cooling, which uses fans and heat sinks to dissipate heat, can be enhanced with improved airflow management techniques.

Liquid cooling solutions offer more efficient heat removal by using a liquid coolant: direct-to-chip cooling absorbs heat directly from the components, while rear-door heat exchangers use liquid to cool the air leaving the rack. Immersion cooling represents another advanced technique, submerging the IT equipment in a dielectric fluid for maximal heat transfer.

What are the benefits of implementing high density cooling?

Implementing high density cooling provides many advantages. It enables the deployment of higher-performance computing systems in a smaller footprint, maximizing space utilization.

It can also reduce energy consumption associated with cooling, as more efficient systems require less power to maintain optimal operating temperatures. Furthermore, effective high density cooling improves the reliability and lifespan of electronic equipment by preventing overheating and thermal stress.

What are the challenges associated with implementing high density cooling?

Implementing high density cooling also presents several challenges. The initial investment cost for advanced cooling solutions, especially liquid cooling, can be substantial. Retrofitting existing data centers with high density cooling infrastructure may require significant modifications to power and cooling distribution systems. Furthermore, specialized expertise is often needed to design, install, and maintain these complex cooling systems.

How do I determine the cooling needs for my high density equipment?

Determining the cooling needs for high density equipment involves several steps. Start by calculating the power consumption of each piece of equipment, as this directly translates to heat generation.

Then, assess the environmental conditions of the space, including ambient temperature and airflow patterns. Finally, consult with cooling experts or use thermal modeling software to simulate the heat dissipation and select the appropriate cooling solution that meets the specific requirements of your hardware and operating environment.
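
The first step above can be sketched in a few lines: sum the power draw of everything in the rack and add headroom, since nearly all of that power becomes heat. The inventory entries, wattages, and 20% headroom below are hypothetical placeholders; substitute measured or nameplate figures for your own equipment.

```python
def rack_heat_load_watts(inventory: list[tuple[str, float, int]],
                         headroom: float = 0.2) -> float:
    """Estimate the heat load of a rack in watts.

    `inventory` holds (name, watts_per_unit, quantity) entries. Nearly all
    electrical power drawn by IT equipment ends up as heat, so the summed
    power is used as the heat load, plus a fractional safety `headroom`.
    """
    total = sum(watts * qty for _, watts, qty in inventory)
    return total * (1 + headroom)


# Hypothetical inventory: (name, watts per unit, quantity).
inventory = [
    ("gpu_server", 10_000, 12),
    ("network_switch", 1_500, 2),
]
load_w = rack_heat_load_watts(inventory)
print(f"Design heat load: {load_w / 1000:.1f} kW")
```

The resulting figure is the input for the later steps, whether that is a conversation with a cooling vendor or a thermal modeling run.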